CLOUD COMPUTING SECURITY

“An essential guide to

securing the cloud.

Organizations running

workloads at the

scale their customers

demand need cloud

security. This book

contains essential

recipes that will set

them up for success

and allow them to scale

securely.“

—JK Gunnink

Google Cloud developer expert

“A must-read for anyone

working or trying to

get into cloud security.

Josh does a great

job of showing how

to implement various

components of a secure

cloud environment all

through the power of

Terraform.”

—Marcus Maxwell

Security Practice Lead, Contino

Cloud Native Security Cookbook

ISBN: 9781098106300

US $59.99 CAN $74.99

Twitter: @oreillymedia

linkedin.com/company/oreilly-media

youtube.com/oreillymedia

With the rise of the cloud, every aspect of IT has been

shaken to its core. The fundamentals for building systems are

changing, and although many of the principles that underpin

security still ring true, their implementation has become

unrecognizable. This practical book provides recipes for AWS,

Azure, and GCP to help you enhance the security of your own

cloud native systems.

Based on his hard-earned experience working with some of

the world’s biggest enterprises and rapidly iterating startups,

consultant Josh Armitage covers the trade-os that security

professionals, developers, and infrastructure gurus need to

make when working with dierent cloud providers. Each recipe

discusses the inherent compromises, as well as where clouds

have similarities and where they’re fundamentally dierent.

• Learn how the cloud provides superior security to what was

achievable in an on-premises world

• Understand the principles and mental models that enable

you to make optimal trade-os as part of your solution

• Learn how to implement existing solutions that are robust

and secure, and devise design solutions to new and

interesting problems

• Deal with security challenges and solutions both horizontally

and vertically within your business

Josh Armitage has been plying his trade as a consultant to

enterprises and startups for many years. He’s seen security from

many angles and has wide and deep technology expertise that

includes writing production assembly on mainframes and operating

a globally distributed machine learning system. Josh now focuses

on cloud native technologies, lean software development, and

taking teams through DevSecOps transformations.

ISBN: 978-1-492-09852-2

Josh Armitage

Cloud Native Security Cookbook

Recipes for a Secure Cloud

978-1-492-09852-2

[LSI]

Cloud Native Security Cookbook

by Josh Armitage

Copyright © 2022 Joshua Hagen Armitage. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are

also available for most titles (http://oreilly.com). For more information, contact our corporate/institutional

sales department: 800-998-9938 or corporate@oreilly.com.

Acquisitions Editor: Jennifer Pollock

Development Editor: Corbin Collins

Production Editor: Jonathon Owen

Copyeditor: Sonia Saruba

Proofreader: Piper Editorial Consulting, LLC

Indexer: Judith McConville

Interior Designer: David Futato

Cover Designer: Karen Montgomery

Illustrator: Kate Dullea

April 2022: First Edition

Revision History for the First Edition

2022-04-20: First Release

See http://oreilly.com/catalog/errata.csp?isbn=9781098106300 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Cloud Native Security Cookbook, the

cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

The views expressed in this work are those of the author and do not represent the publisher’s views. While

the publisher and the author have used good faith efforts to ensure that the information and instructions

contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or

omissions, including without limitation responsibility for damages resulting from the use of or reliance

on this work. Use of the information and instructions contained in this work is at your own risk. If any

code samples or other technology this work contains or describes is subject to open source licenses or the

intellectual property rights of others, it is your responsibility to ensure that your use thereof complies

with such licenses and/or rights.

This work is part of a collaboration between O’Reilly and Palo Alto Networks. See our statement of edito‐

rial independence.

Table of Contents

Preface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

1.

Security in the Modern Organization. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Why Security Is Critical 1

1.2 What Is Meant by Cloud Native Security? 3

1.3 Where Security Fits in the Modern Organization 5

1.4 The Purpose of Modern Security 7

1.5 DevSecOps 7

1.6 How to Measure the Impact of Security 12

1.7 The Principles of Security 14

2.

Setting Up Accounts and Users. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.1 Scalable Project Structures on GCP 19

2.2 Scalable Account Structures on AWS 27

2.3 Scalable Subscription Structures on Azure 35

2.4 Region Locking on GCP 40

2.5 Region Locking on AWS 43

2.6 Region Locking on Azure 47

2.7 Centralizing Users on GCP 49

2.8 Centralizing Users on AWS 54

2.9 Centralizing Users on Azure 58

3.

Getting Security Visibility at Scale. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

3.1 Building a Cloud Native Security Operations Center on GCP 64

3.2 Building a Cloud Native Security Operations Center on AWS 71

3.3 Building a Cloud Native Security Operations Center on Azure 75

3.4 Centralizing Logs on GCP 78

3.5 Centralizing Logs on AWS 82

v

3.6 Centralizing Logs on Azure 88

3.7 Log Anomaly Alerting on GCP 94

3.8 Log Anomaly Alerting on AWS 98

3.9 Log Anomaly Alerting on Azure 102

3.10 Building an Infrastructure Registry on GCP 106

3.11 Building an Infrastructure Registry on AWS 110

3.12 Building an Infrastructure Registry on Azure 118

4.

Protecting Your Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

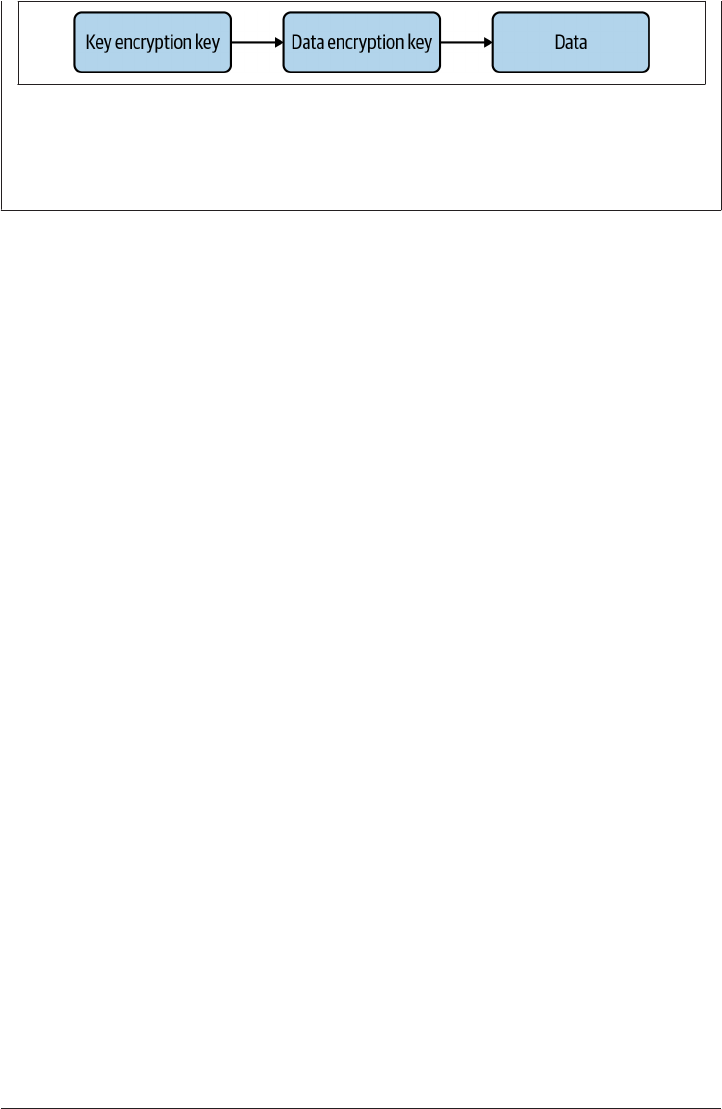

4.1 Encrypting Data at Rest on GCP 124

4.2 Encrypting Data at Rest on AWS 129

4.3 Encrypting Data at Rest on Azure 137

4.4 Encrypting Data on GCP with Your Own Keys 143

4.5 Encrypting Data on AWS with Your Own Keys 147

4.6 Encrypting Data on Azure with Your Own Keys 151

4.7 Enforcing In-Transit Data Encryption on GCP 156

4.8 Enforcing In-Transit Data Encryption on AWS 160

4.9 Enforcing In-Transit Data Encryption on Azure 162

4.10 Preventing Data Loss on GCP 165

4.11 Preventing Data Loss on AWS 170

4.12 Preventing Data Loss on Azure 174

5.

Secure Networking. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

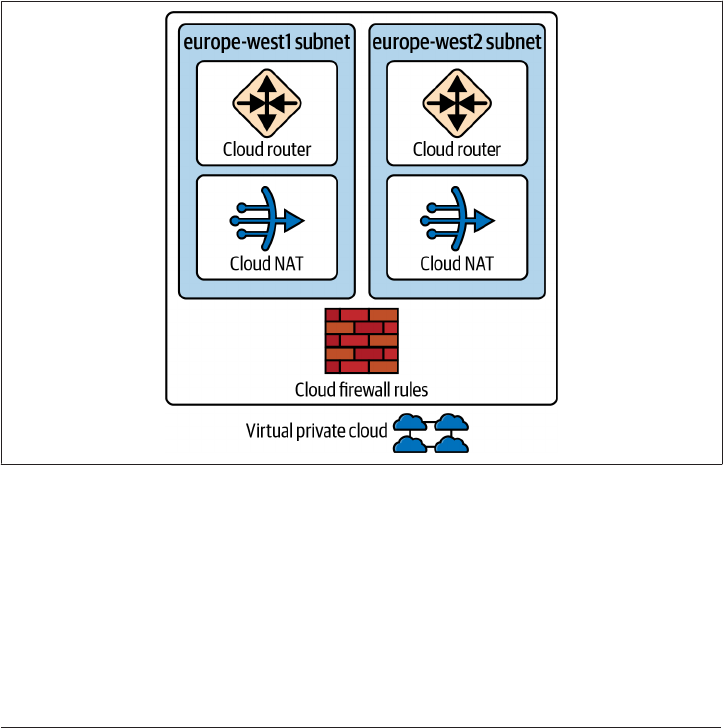

5.1 Networking Foundations on GCP 182

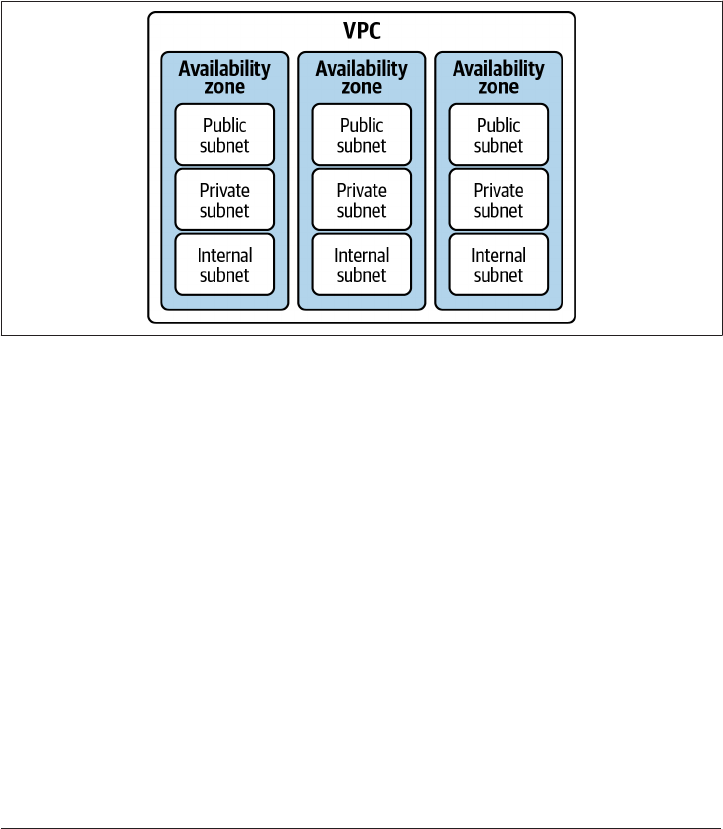

5.2 Networking Foundations on AWS 188

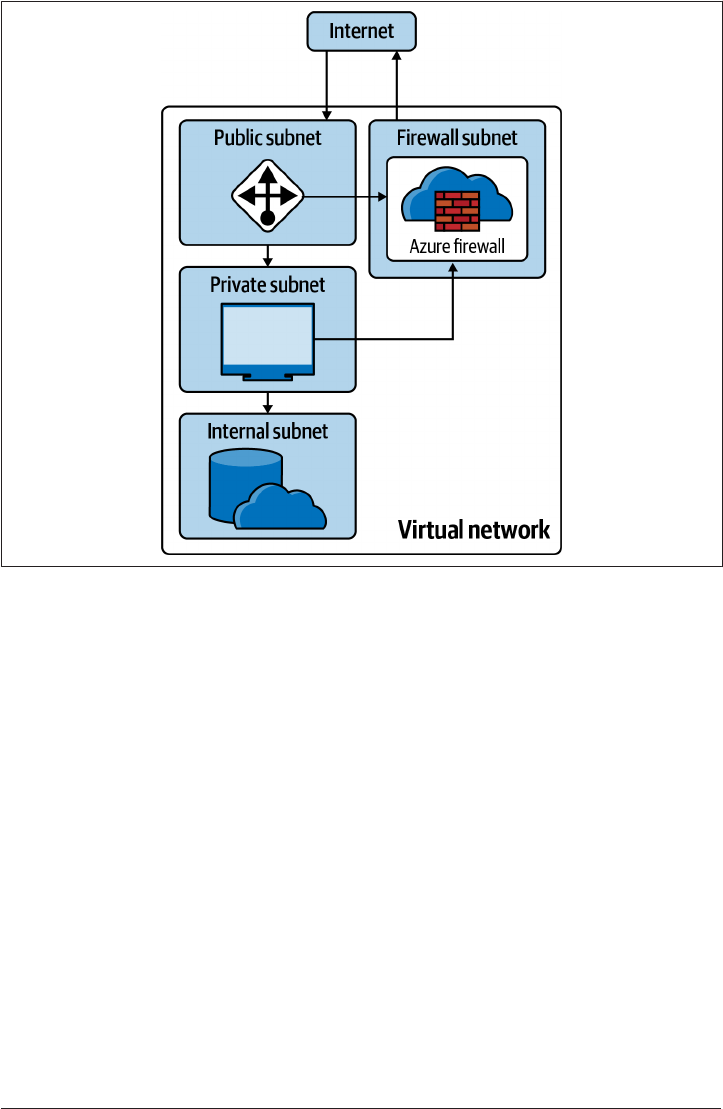

5.3 Networking Foundations on Azure 195

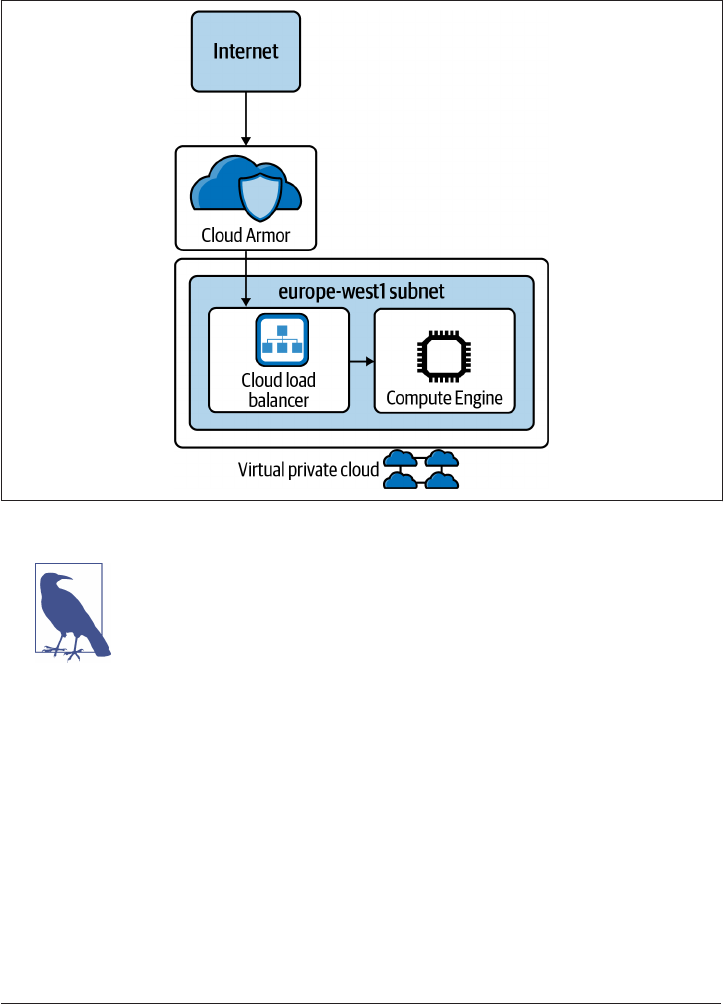

5.4 Enabling External Access on GCP 203

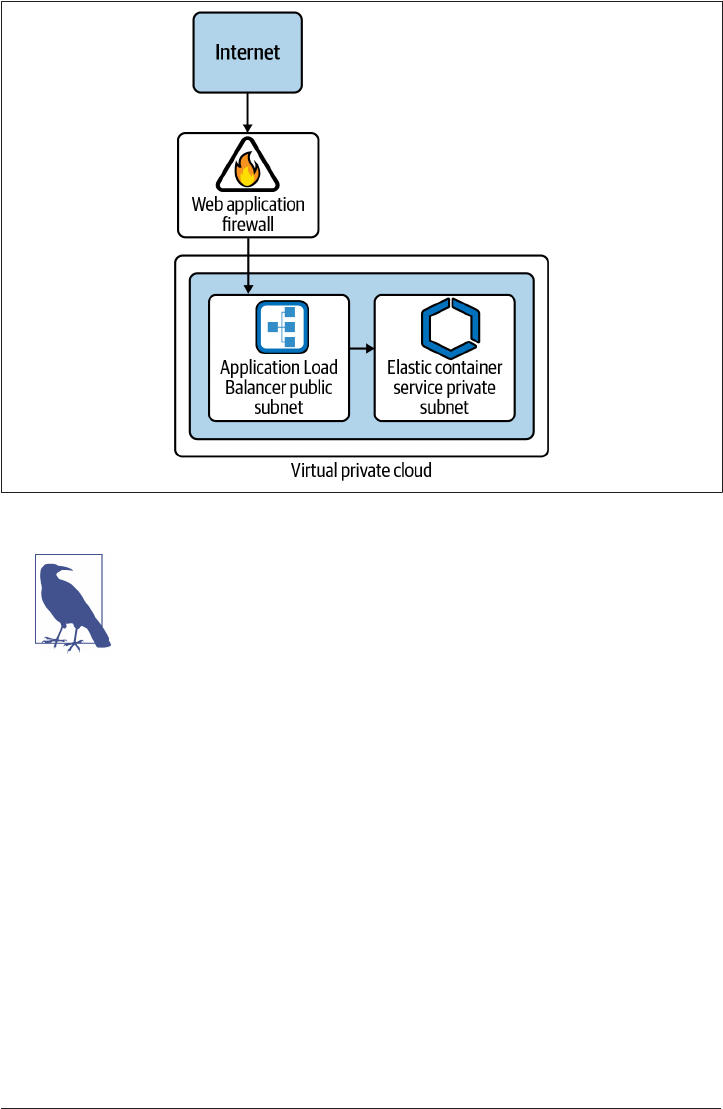

5.5 Enabling External Access on AWS 208

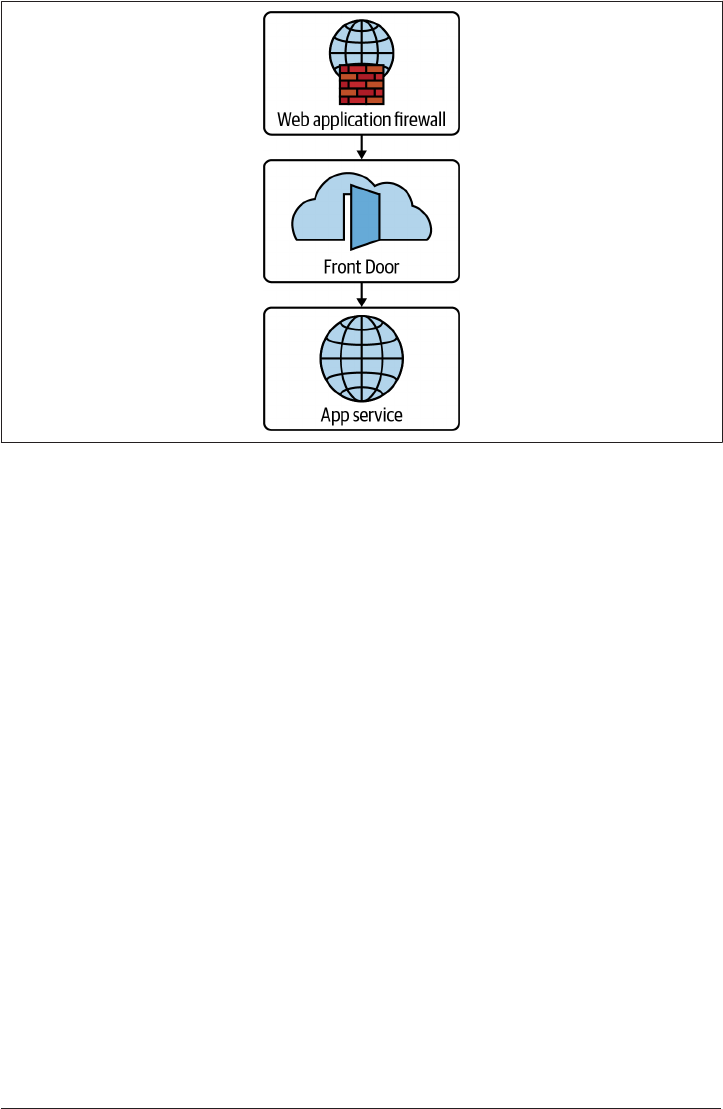

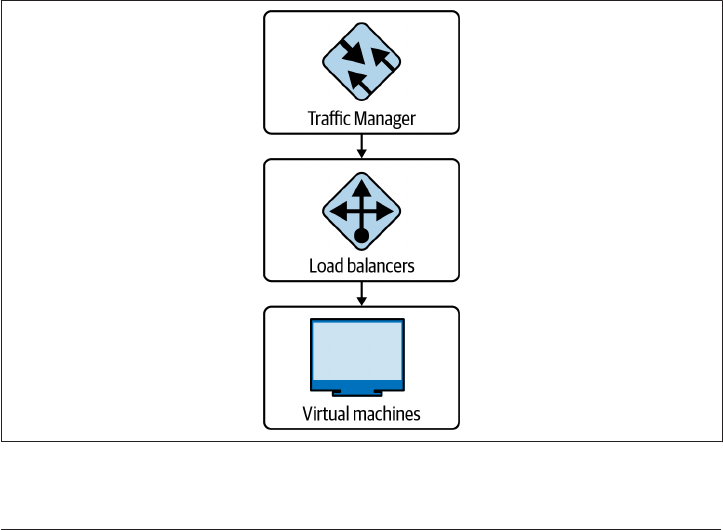

5.6 Enabling External Access on Azure 214

5.7 Allowing Access to Internal Resources on GCP 219

5.8 Allowing Access to Internal Resources on AWS 225

5.9 Allowing Access to Internal Resources on Azure 231

5.10 Controlling External Network Connectivity on GCP 236

5.11 Controlling External Network Connectivity on AWS 243

5.12 Controlling External Network Connectivity on Azure 251

5.13 Private Application Access on GCP 257

5.14 Private Application Access on AWS 265

5.15 Private Application Access on Azure 272

6.

Infrastructure as Code. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

6.1 Building Secure Infrastructure Defaults on GCP 278

6.2 Building Secure Infrastructure Defaults on AWS 282

vi | Table of Contents

6.3 Building Secure Infrastructure Defaults on Azure 288

6.4 Functions as a Service on GCP 294

6.5 Functions as a Service on AWS 299

6.6 Functions as a Service on Azure 303

6.7 Robust Deployment on GCP 309

6.8 Robust Deployment on AWS 314

6.9 Robust Deployment on Azure 322

6.10 Deployment at Scale on GCP 329

6.11 Deployment at Scale on AWS 331

6.12 Deployment at Scale on Azure 336

7.

Compliance as Code. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

7.1 Labeling Resources on GCP 342

7.2 Tagging Resources on AWS 347

7.3 Tagging Resources on Azure 352

7.4 Detecting Noncompliant Infrastructure on GCP 357

7.5 Detecting Noncompliant Infrastructure on AWS 364

7.6 Detecting Noncompliant Infrastructure on Azure 369

7.7 Preventing Noncompliant Infrastructure on GCP 375

7.8 Preventing Noncompliant Infrastructure on AWS 379

7.9 Preventing Noncompliant Infrastructure on Azure 383

7.10 Remediating Noncompliant Infrastructure on GCP 388

7.11 Remediating Noncompliant Infrastructure on AWS 396

7.12 Remediating Noncompliant Infrastructure on Azure 400

8.

Providing Internal Security Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.1 Protecting Security Assets and Controls on GCP 408

8.2 Protecting Security Assets and Controls on AWS 412

8.3 Protecting Security Assets and Controls on Azure 417

8.4 Understanding Machine Status at Scale on GCP 422

8.5 Understanding Machine Status at Scale on AWS 426

8.6 Understanding Machine Status at Scale on Azure 430

8.7 Patching at Scale on GCP 435

8.8 Patching at Scale on AWS 439

8.9 Patching at Scale on Azure 442

8.10 Data Backup on GCP 447

8.11 Data Backup on AWS 451

8.12 Data Backup on Azure 456

9.

Enabling Teams. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

9.1 Enabling Project Sharing on GCP 462

9.2 Enabling Account Sharing on AWS 465

Table of Contents | vii

9.3 Enabling Resource Group Sharing on Azure 468

9.4 Application Security Scanning on GCP 472

9.5 Application Security Scanning on AWS 475

9.6 Application Security Scanning on Azure 479

10. Security in the Future. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 483

10.1 The Infinite Game 484

10.2 Building Capability 484

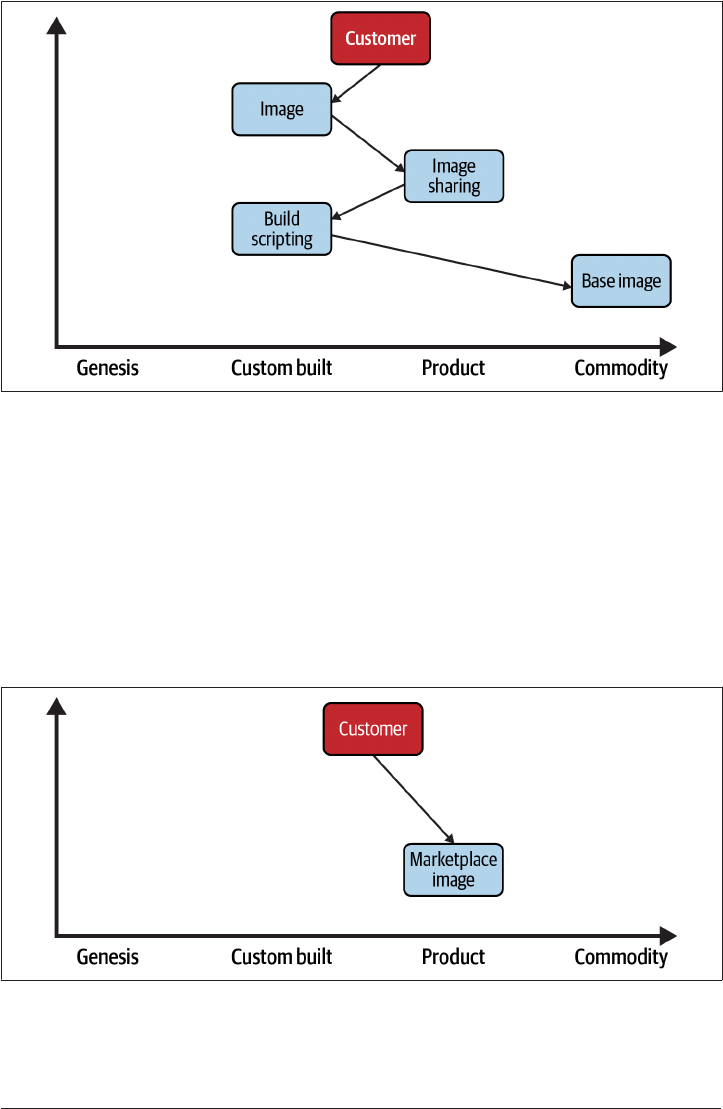

10.3 Building Situational Awareness 486

10.4 Conclusion 488

11.

Terraform Primer. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 489

11.1 Authenticating with GCP 490

11.2 Authenticating with AWS 490

11.3 Authenticating with Azure 490

Index. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 491

viii | Table of Contents

Preface

In the early 2000s, public cloud emerged as a new a paradigm that revolutionized

how technology is consumed across the world. Rather than waiting weeks to months

for new computers to be ordered, delivered, and racked, access to machines is mere

seconds away. The company bringing you one-click shopping was now bringing you

one-click computing.

In my first job out of university, I was working in the mainframe division of IBM,

building a cloud-delivered virtualized mainframe environment. We were looking to

bring DevOps to the world’s most venerated enterprises. What became a focus of the

customer conversations over time was security. How can they be assured that their

data is safe when they upload it to the cloud?

When the cloud was making waves in even the most risk-averse industries, I became

convinced that it was the way forward for businesses looking to excel in the digital

age.

Since IBM, I have spent the majority of my time working as a consultant in Australia

and the UK, focused on helping start-ups and enterprises alike use the cloud as their

innovation engine, empowering their developers to deliver exceptional outcomes.

Through my experience I have seen many false steps, patterns, and anti-patterns that

reappear as businesses and engineers make their first forays into a cloud-native

world. This book is focused on how to use the cloud securely.

The recipes in this book show you how to build a secure foundation in your cloud

environment and how to leverage the first-party security solutions to stay on top of

your estate as it organically scales.

Who This Book Is For

This book is about getting hands-on with the cloud. Each recipe comes with a fully

worked solution in Terraform, the most widely used infrastructure-as-code tool.

ix

For each problem presented, there is a recipe for each of the three primary cloud pro‐

viders, Amazon Web Services, Microsoft Azure, and Google Cloud Platform. As the

modern world is becoming increasingly multi-cloud, it is ever more valuable to be

cloud multilingual.

Although the three clouds have significant overlap in the services they provide, the

design decisions that went into the cloud itself have massive ramifications for secu‐

rity. With this book you’ll become empowered to translate between the three clouds

and rapidly solve problems wherever they present.

How This Book Is Organized

This book has nine chapters of recipes, each focused on a particular theme. These

range from how to structure your estate and manage users to how to ensure compli‐

ance at scale to the intricacies of identity and access management on each cloud pro‐

vider.

What You Need to Use This Book

In order to complete the recipes in this book, you will need access to your cloud of

choice. Depending on the recipe, you will need highly privileged credentials to make

the required changes.

All the recipes were developed using Terraform version 1.0. If you have not used Ter‐

raform before, then going through Chapter 11 will show you how to safely authenti‐

cate against your cloud.

Conventions Used in This Book

The following typographical conventions are used in this book:

Italic

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant width

Used for program listings, as well as within paragraphs to refer to program ele‐

ments such as variable or function names, databases, data types, environment

variables, statements, and keywords.

Constant width bold

Shows commands or other text that should be typed literally by the user.

Constant width italic

Shows text that should be replaced with user-supplied values or by values deter‐

mined by context.

x | Preface

This element signifies a general note.

This element indicates a warning or caution.

Using Code Examples

Supplemental material (code examples, exercises, etc.) is available for download at

https://github.com/Armitagency/cloud-native-security-cookbook-tf.

If you have a technical question or a problem using the code examples, please send

email to bookquestions@oreilly.com.

This book is here to help you get your job done. In general, if example code is offered

with this book, you may use it in your programs and documentation. You do not

need to contact us for permission unless you’re reproducing a significant portion of

the code. For example, writing a program that uses several chunks of code from this

book does not require permission. Selling or distributing examples from O’Reilly

books does require permission. Answering a question by citing this book and quoting

example code does not require permission. Incorporating a significant amount of

example code from this book into your product’s documentation does require per‐

mission.

We appreciate, but generally do not require, attribution. An attribution usually

includes the title, author, publisher, and ISBN. For example: "Cloud Native Security

Cookbook by Josh Armitage (O’Reilly). Copyright 2022 Joshua Hagen Armitage,

978-1-098-10630-0.”

If you feel your use of code examples falls outside fair use or the permission given

above, feel free to contact us at permissions@oreilly.com.

O’Reilly Online Learning

For more than 40 years, O’Reilly Media has provided technol‐

ogy and business training, knowledge, and insight to help

companies succeed.

Preface | xi

Our unique network of experts and innovators share their knowledge and expertise

through books, articles, and our online learning platform. O’Reilly’s online learning

platform gives you on-demand access to live training courses, in-depth learning

paths, interactive coding environments, and a vast collection of text and video from

O’Reilly and 200+ other publishers. For more information, visit http://oreilly.com.

How to Contact Us

Please address comments and questions concerning this book to the publisher:

O’Reilly Media, Inc.

1005 Gravenstein Highway North

Sebastopol, CA 95472

800-998-9938 (in the United States or Canada)

707-829-0515 (international or local)

707-829-0104 (fax)

We have a webpage for this book, where we list errata, examples, and any additional

information. You can access this page at https://oreil.ly/cloudNativeCkbk.

Email bookquestions@oreilly.com to comment or ask technical questions about this

book.

For news and information about our books and courses, visit http://oreilly.com.

Find us on LinkedIn: https://linkedin.com/company/oreilly-media

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

Acknowledgments

This book stands upon the shoulders of other people’s ideas and knowledge. I am

indebted to the many people who have readily shared their expertise so that we can

explore further and higher rather than continually relearn the same lessons.

Above the main coworking space in my home city of Perth is the Greek proverb “A

society grows great when old men plant trees whose shade they know they shall never

sit in,” an ideal I try to hold close. I truly feel that everyone has valuable stories to

share. Wherever you are on your journey, there are people behind you or next to you

on their own journey who could benefit from your experience. This book is my

attempt to help people develop safer systems, protect their users, and have a more ful‐

filling and happier working life.

xii | Preface

Having spent a number of years consulting with the world’s biggest enterprises, I have

firsthand experience of both the pains and triumphs that come with digital and cloud

transformation, especially in the security domain. This book is a distillation of those

days in the trenches, with a bias for action that is imperative for real change to

happen.

Working with computers was almost preordained for me, as it seems the occupation

of choice for my family. My father and I both got jobs as mainframe developers

straight out of university about 30 years apart, much to his enjoyment when he

found out.

I started writing this book while in lockdown in the UK, attempting to find a project

to help keep me sane. I finished it in Australia just before my daughter was due to

arrive. I couldn’t have finished this book without the never-ending support of my

wife, Rebecca, who has had to deal with many late nights and weekends of me ham‐

mering the keyboard. In the end, the timing could not have worked out better as I

move on from this herculean labor to being a father.

Thank you to my triumvirate of tech reviewers who have challenged me and kept me

honest through the book, Marcus Maxwell, JK Gunnink, and Pete Yandell. Your

hours spent dissecting my writing has taken the book to a higher level and I am for‐

ever grateful.

To the amazing staff at O’Reilly, especially Corbin Collins for supporting me through‐

out this endeavor, Jennifer Pollock for giving me the chance to write this book, and

the production team, thank you for having the requisite patience and ensuring that

this book became a reality.

It’s hard to imagine this book existing were it not for the support of each and every

one of you.

Preface | xiii

CHAPTER 1

Security in the Modern Organization

In this chapter, you will learn the following:

• Why security is becoming ever more critical in the modern age

• What is meant by cloud native security

• Where security fits in the modern organization

• What the purpose of security is

•

What DevSecOps really is

• How to measure the impact of security

• The underlying principles of security

This foundation is critical for you to compellingly articulate why investment into

security is and will continue to be mandatory and how the advent of the cloud has

not invalidated the fundamental principles of security but has transformed how they

are applied.

1.1 Why Security Is Critical

Seeing as you’re reading this, you probably already believe in the criticality of secu‐

rity; however, it’s important to understand how security continues to be ever more

important day to day and year to year.

Life in the 21st century is digital first—our lives revolve around the internet and tech‐

nology. Everyone’s personal information is given to and stored by trusted third par‐

ties. We all believe that it will be handled safely and securely. What recent history has

shown us, however, is that security breaches are the norm; they are to be expected.

1

This information is the gold filling the 21st-century bank vaults of technology titans.

Where you have concentrations of wealth, you must scale your security to match.

Human instinct makes us believe that to go slowly is to go safely, which often mani‐

fests as lengthy security assessments, full multiweek penetration tests on every release,

and security being the slowest moving part on the path to production.

This is actively harmful in two ways. First, the systems that businesses operate are

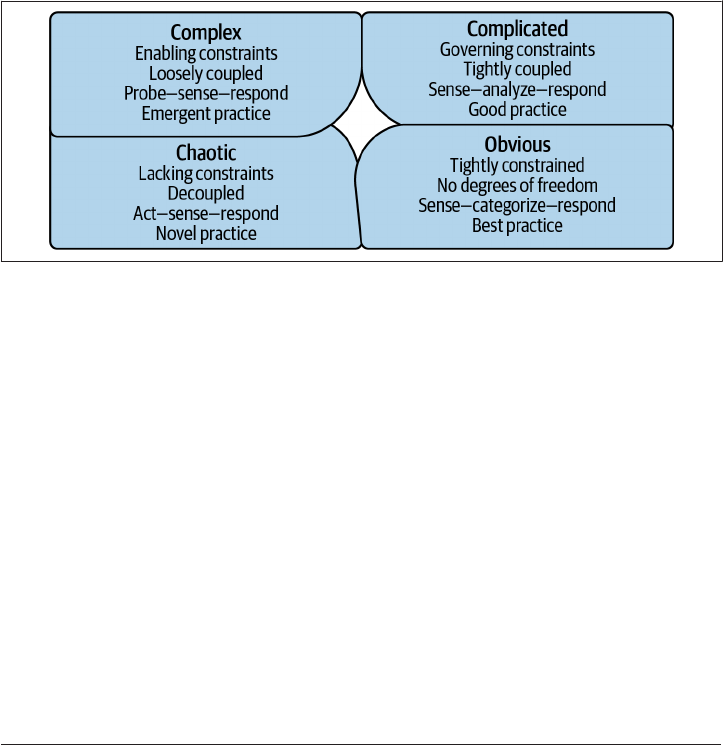

inherently complex. Complexity theory and other models of complexity, such as the

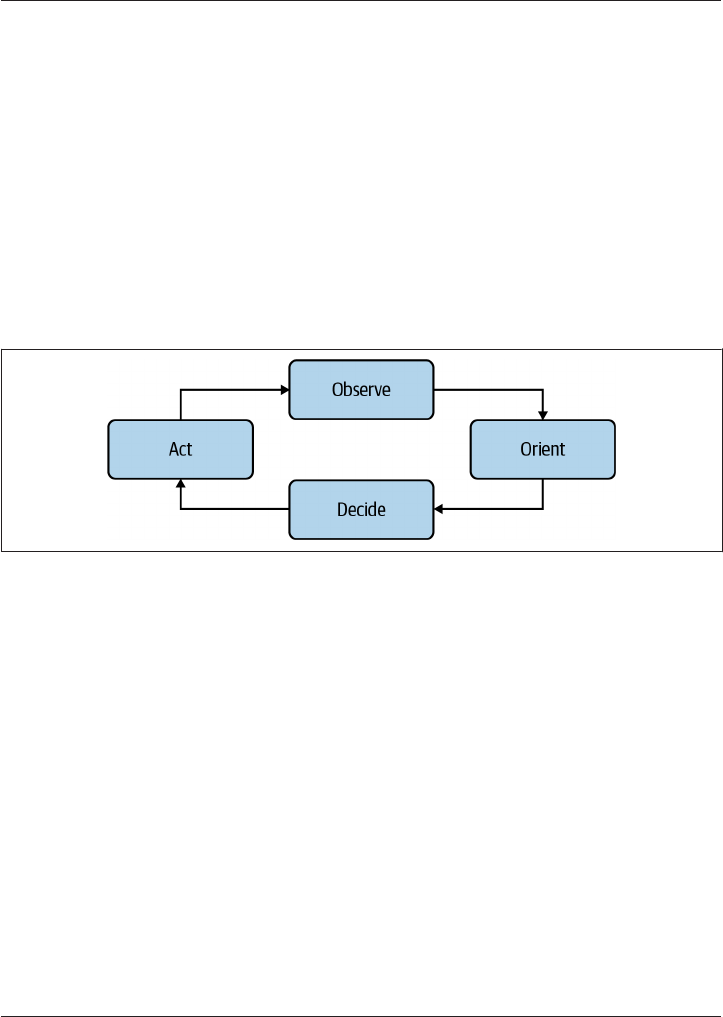

Cynefin framework, shown in Figure 1-1, teach us that it is impossible to think our

way through a complex system. No amount of reading code and looking at architec‐

ture diagrams can allow you to fully understand all the possibilities and potential vul‐

nerabilities within a system. Being able to react and apply fixes quickly when issues

are discovered, such as the Log4j vulnerability in December 2021, results in a supe‐

rior security posture when compared to lengthy, time-intensive review cycles.

Figure 1-1. Cynen framework

But even if it were possible with sufficient time to root out all security vulnerabilities,

for a business, moving slowly in the 21st century is a recipe for disaster. The level of

expectation set by the Googles, Microsofts, and Amazons of the world has laid down

a gauntlet. Move fast or die. Security teams are caught between two unstoppable

forces: the business imperative for agility through speed and the exponential growth

in breach impacts.

When a breach happens, the business suffers in a number of ways, to name but a few:

• Reputational damage

• Legal liabilities

• Fines and other financial penalties

• Operational instability and loss of revenue

•

Loss of opportunity

2 | Chapter 1: Security in the Modern Organization

The vast majority of businesses are either already in the cloud or are exploring how

they can migrate their estates. With the near ubiquity of cloud infrastructure, both

governments and regulators are investing significantly in their understanding of how

companies are using the cloud. Examples such as the General Data Protection Regu‐

lation (GDPR) and the California Consumer Privacy Act are just the tip of a wave of

increased security expectations, controls, and scrutiny. Over time, the damage suf‐

fered by a business from a breach will exponentially and catastrophically increase.

Our principles of security are not invalidated by this new reality, but how they are

applied, embedded, and upheld needs to fundamentally transform.

1.2 What Is Meant by Cloud Native Security?

A common trope of the technology industry is that definitions become loose over

time. In this book, cloud native is defined as leveraging technology purpose-built to

unlock the value of, and accelerate your adoption of, the cloud. Here is a list of common

properties of cloud native solutions:

•

It is automation friendly and should be fully configurable through infrastructure

as code (IaC).

• It does not place unnecessary, artificial constraints on your architecture. For

example, per machine pricing is not considered a cloud native pricing model.

•

It elastically scales. As your systems and applications grow in size, the solution

scales in lockstep.

• It natively supports the higher-level compute offerings on the cloud platforms. It

should support serverless and containerized workloads, as well as the plethora of

managed service offerings.

In this book, where possible, the recipes use the managed services provided by the

cloud platforms themselves. They have all the previous properties, are purpose-built

to support customers in their cloud journey, and are easily integrated into your estate.

IT security has existed from the day there was something of value stored on a com‐

puter. As soon as things of value were stored on computers, it was necessary to

defend them. As an industry, IT has proven the ability to undergo seismic shifts with

frightening regularity; embracing cloud native is simply the most recent. As more

value is poured into technology and systems, the potential value to be gained by

attacking them increases, therefore our security must increase in kind. The cloud can

bring so much good, but with it comes new challenges that will need cloud native

people, processes, and tools to overcome.

1.2 What Is Meant by Cloud Native Security? | 3

The Beginnings of the Cloud

Back in 2006, Amazon first announced Amazon Web Services (AWS), offering pay-

as-you-go technology to businesses. Over the intervening 15 years, a tectonic shift

fundamentally altered how companies approach technology. Historically, businesses

ordered and managed hardware themselves, investing huge sums of capital up front

and employing large teams to perform “undifferentiated heavy lifting” to operate this

infrastructure. What Amazon started, followed in 2008 by Google and 2010 by

Microsoft, allowed businesses to provision new infrastructure on demand in seconds,

as opposed to waiting months for the hardware to arrive and be racked, configured,

and released for use.

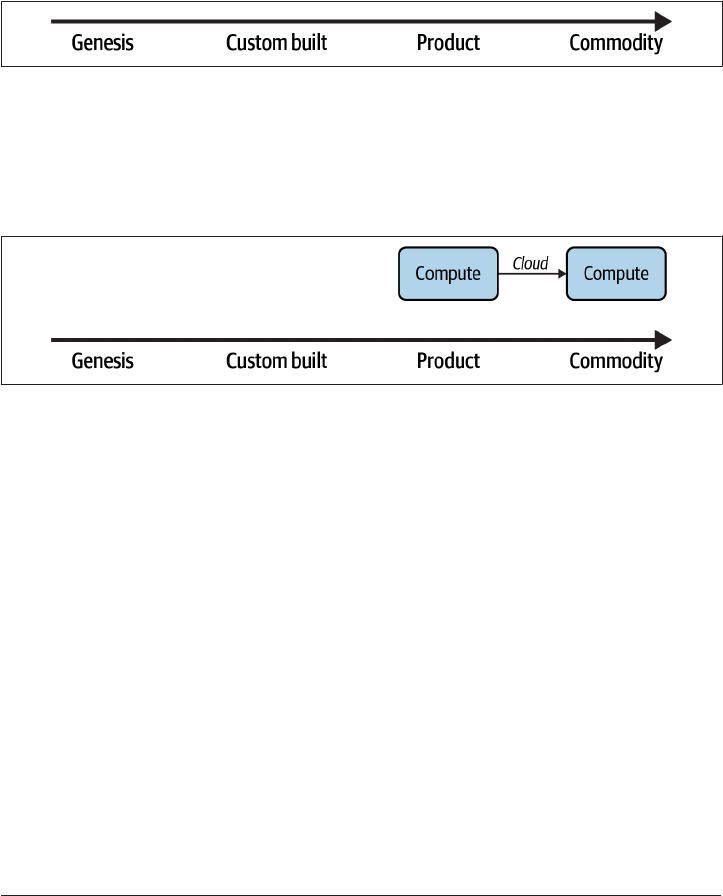

Infrastructure became a commodity, like water or electricity. This enabled businesses

to rapidly experiment, become more agile, and see technology as a business differen‐

tiator rather than a cost center. Over time, the cornucopia of services offered by the

Cloud Service Providers (CSPs) has grown to encompass almost everything a busi‐

ness could need, with more being released every day. Nearly every company on the

planet, including the most ancient of enterprises, is cloud first. The cloud is here to

stay and will fundamentally define how businesses consume technology in the future.

Old Practices in the New Reality

When something as transformational as cloud computing occurs, best practices

require time to emerge. In the intervening gap, old practices are applied to the new

reality. The security tools and processes which served us well in the pre-cloud age

were not built to contend with the new normal. The pace of change posed by the

cloud presented new security challenges the industry was not equipped to face.

Through effort, time, and experimentation, it is now understood how to achieve our

security objectives by working with, not against, the cloud. You can now have cloud

native security.

Cloud native security is built around the following fundamental advantages of cloud

computing:

Pay for consumption

In a cloud native world, expect to only pay for services as you use them, not for

idle time.

Economies of scale

As the CSP is at hyperscale, it can achieve things which cannot be done in isola‐

tion, including lower pricing, operational excellence, and superior security

postures.

4 | Chapter 1: Security in the Modern Organization

No capacity planning

Cloud resources are made to be elastic; they can scale up and down based on

demand rather than having to go through the effort-intensive and often inaccu‐

rate process of capacity planning.

Unlock speed and agility

By allowing companies and teams to rapidly experiment, change their mind, and

move quickly, the cloud allows for capturing business value that would be impos‐

sible otherwise.

Stop spending money on

“undierentiated heavy liing”

Rather than focus on activities that cannot differentiate you from your competi‐

tion, allow the CSP to focus on those tasks while you focus on core competencies.

Span the globe

The CSP allows businesses to scale geographically on demand by having loca‐

tions all over the world that operate identically

When you look at the processes and tools that constitute cloud native security, you

enable the consumption and promised benefits of the cloud, not constrain them.

1.3 Where Security Fits in the Modern Organization

Historically, security has operated as a gatekeeper, often as part of change advisory

boards (CABs), acting as judge, jury, and executioner for system changes. This siloed

approach can only take you so far. The waste incurred by long feedback loops, long

lead times, and slow pace of change is incompatible with a digital-first reality.

By looking to block rather than enable change, the security and delivery teams are

forced into a state of eternal conflict, creating friction that breeds animosity and pre‐

vents the business from achieving its goals. Team Topologies, by Matthew Skelton and

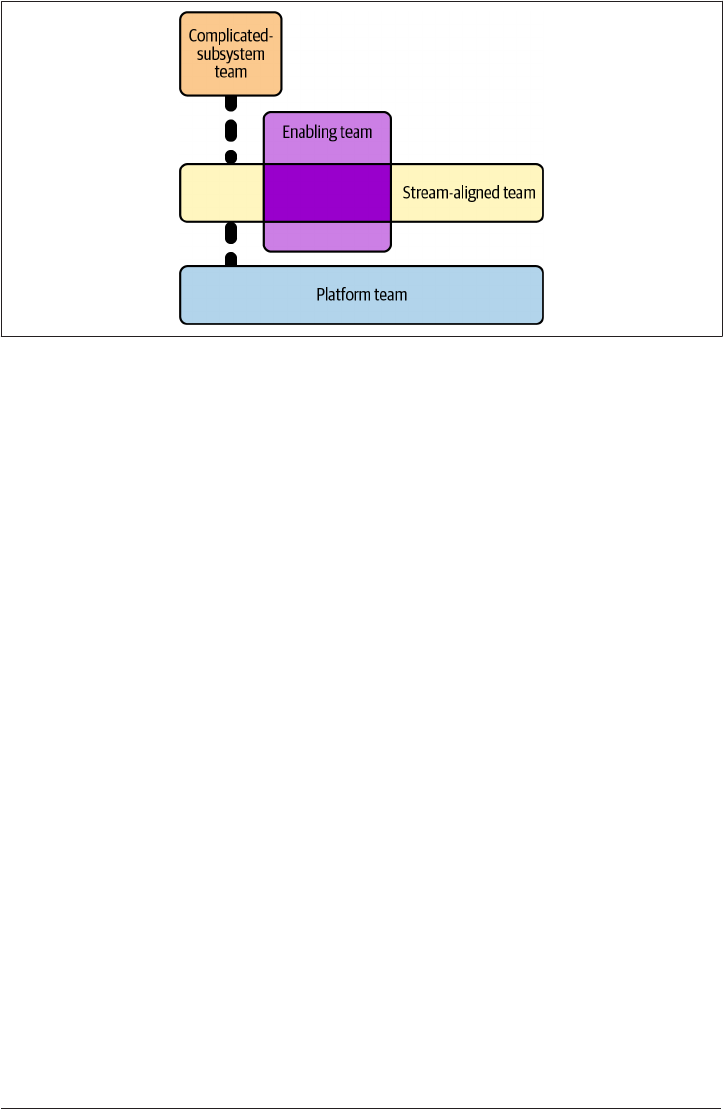

Manual Pais (IT Revolution Press), examines the four team archetypes that are fun‐

damental to exceptional technology performance: enabling teams, platform teams,

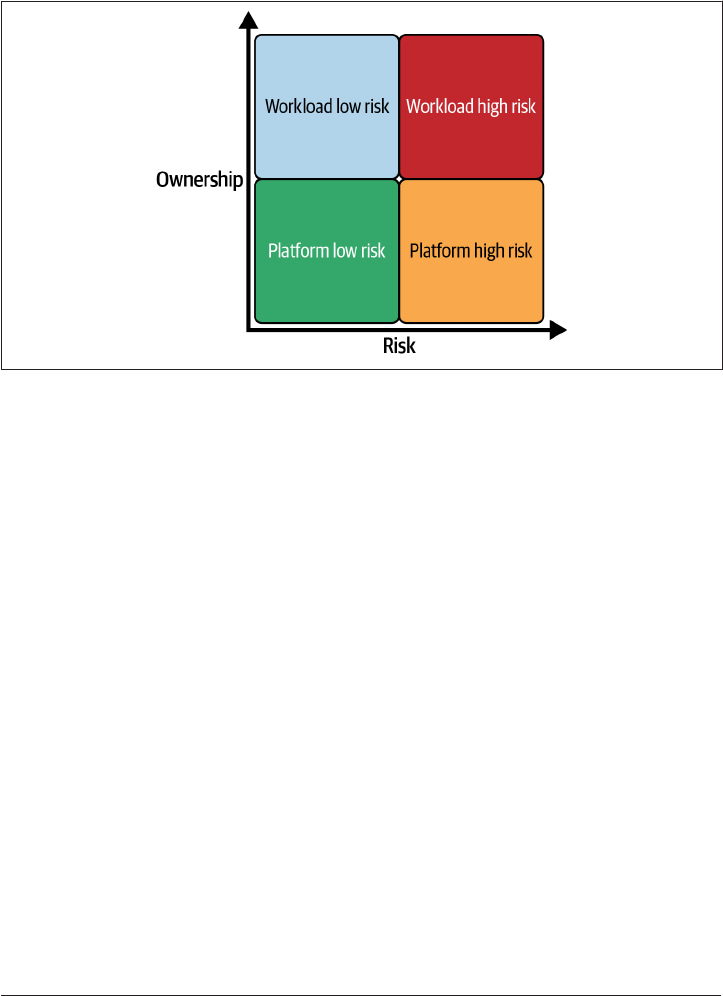

complicated-subsystem teams, and stream-aligned teams, as shown in Figure 1-2.

1.3 Where Security Fits in the Modern Organization | 5

Figure 1-2. Team topologies

Stream-aligned teams are how the business directly delivers value. They build the sys‐

tems and services that allow the business to function, interact with customers, and

compete in the market.

Complicated-subsystem teams look after systems that require their own deep domain

expertise, such as a risk calculation system in an insurance company.

Platform teams produce compelling internal products that accelerate stream-aligned

teams, such as an opinionated cloud platform, which is the focus of Chapter 2.

Enablement teams are domain specialists who look to impart their understanding of

other teams within the business.

Simply put, all other teams are there to enable the stream-aligned team. Security

needs to operate as an enablement team; i.e., they are domain experts that actively

collaborate with other teams. It is unrealistic and infeasible to expect that all engi‐

neers become security experts throughout a company, although it is not unrealistic or

infeasible to expect and embed a base level of security competency in all engineers.

Even in a highly automated world, developing systems is knowledge work—it is peo‐

ple who determine the success of your security initiatives.

It is through this enablement lens that many of the recipes in this cookbook make the

most sense. Through working with enterprises around the world, I have seen that the

paradigm shift from gatekeeper to enabler can be difficult to undertake; the animosity

and lack of trust between security and delivery built over many years are powerful

inhibitors of change. However, to take full advantage of cloud native security, this

shift must happen, or misaligned incentives will scupper any and all progress.

6 | Chapter 1: Security in the Modern Organization

1.4 The Purpose of Modern Security

Security operates in the domain of risk. Perfect security is not a realistic or achievable

goal; at any one time, you cannot provide services and be known to be immune to all

threats. This reality is even borne out in how fines are handed out following breaches:

a substantial percentage of the fine is negated if reasonable attempts have been made

to prevent the breach. So, if you cannot achieve complete security, then what is your

north star? What is your goal?

At the macro level, your goal is to make commercially reasonable efforts to minimize

the chance of security incidents. What is deemed commercially reasonable varies

wildly among companies. Often, startups have a significantly higher risk tolerance for

security than regulated enterprises, as common sense would lead us to predict. What

is important to keep in mind is that this much lower risk tolerance does not mean

that an enterprise cannot move as fast as a startup due to overbearing security con‐

cerns. Throughout this book you will see how, with the correct principles and recipes

in place, you do not handicap your stream-aligned teams.

At the micro level, your goal is to ensure that a single change does not present an

intolerable amount of risk. Again, what is tolerable is highly context specific, but

thankfully, techniques to minimize risk are often universal. Later in this chapter, as I

discuss DevSecOps, I will drill into what properties of changes allow you to minimize

the risk and how embracing a DevSecOps culture is required for aligning teams

around security objectives.

1.5 DevSecOps

Before I can dive into what DevSecOps is, you first need to understand its precursor,

DevOps.

What Is DevOps?

At its heart, DevOps is a cultural transformation of software delivery. It is heavily

influenced by lean theory and is most simply described as bringing together what his‐

torically were two disparate silos, development and operations, hence DevOps, or the

commonly used soundbite, “You build it, you run it.”

To put it into numbers, elite teams operating in a DevOps model do the following:

•

deploy code 208 times more frequently

• deploy code 106 times faster

• recover from incidents 2,604 times faster

•

make 1/7 the amount of changes that fail

1.4 The Purpose of Modern Security | 7

As you can see from the numbers, DevOps was revolutionary, not evolutionary, and

DevSecOps has the same potential.

Understanding these numbers is crucial for modern security as it allows for align‐

ment around a common set of constraints—security objectives need to be achieved

without preventing teams from becoming elite performers. Being elite for lead time

means that changes are in production within an hour, meaning that mandatory secu‐

rity tests that take a day to complete are incompatible with the future direction of the

company. A classic example of this in the enterprise is a mandatory penetration test

before every release; although the goal of the penetration test is valuable, the activity

itself and its place in the process need to change. The increasingly popular approach

of bug bounties is a potential replacement for penetration tests. These challenges that

security teams are now facing are the same ones that operations teams faced at the

birth of DevOps in the early 2000s.

It’s crucial to set the context, as it leads to the right conversations, ideas, problems,

and solutions to achieve the right outcomes. As you can see, the engineering and cul‐

tural principles needed to allow companies to merely survive today forces wide-scale

changes in security, the reality of which is what the industry calls DevSecOps.

Two of the seminal texts in DevOps,

e Phoenix Project (by Gene Kim et al., IT Rev‐

olution Press) and e Unicorn Project (by Gene Kim, IT Revolution Press), elaborate

“the Three Ways” and “the Five Ideals” as underlying principles. I’ll examine them

briefly here as they also underpin DevSecOps.

The Three Ways

These are the Three Ways:

Flow and Systems inking

The first way tells us that you need to optimize for the whole system, not simply

for one component. Local optimization can often come at the expense of the sys‐

tem as a whole, which leads us to the realization that the most secure system is

not necessarily in the best interests of the business. Delaying a critical feature

because of a vulnerability is a trade-off that needs to be made on a case-by-case

basis.

Amplify Feedback Loops

The second way tells us that feedbacks loops are the mechanisms that allow for

correction; the shorter they are, the faster you can correct. This leads us to the

potential value of the short-term embedding of security specialists in teams, and

also adopting tooling that allows for rapid feedback on changes, such as in IDE

SAST tooling.

8 | Chapter 1: Security in the Modern Organization

Culture of Continual Experimentation and Learning

The third way is how you need to embrace risk, and only by taking and learning

from risks can you achieve mastery. This leads us to the realization that the tech‐

nology domain is moving forward ever more rapidly, and you need to move with

it, not fight against it. Dogma leads to ruin.

The Five Ideals

These are the Five Ideals:

Locality and Simplicity

The first ideal around locality means that you should enable autonomous teams;

changes should be able to happen without approval from many people and

teams. Teams should own their entire value stream, which is a significant shift

from the siloed approach of the past, where handoffs reduced accountability and

added waste.

Focus, Flow, and Joy

The second ideal means that you should be looking to enable teams to focus on

their objectives and find flow, as working in a state of flow brings joy. Rather than

getting in each other’s way and working in the gatekeeper functions of the past,

you need to find how you can help people and teams achieve flow and make the

passage of work easy and enjoyable.

Improvement of Daily Work

Historically, the rush for features has drowned systems and teams in seas of tech‐

nical debt. Instead, you need to be mindful and enable teams to pay down their

technical debt. There may be systems that are in need of decommissioning, sys‐

tems that have started to struggle to scale, or decisions that have proved less than

optimal over time.

Psychological Safety

People should feel secure and safe to express themselves and should not be

blamed for mistakes, which instead are used as opportunities for learning.

Through rigorous and meticulous study, Google found that psychological safety

is one of the key properties of high-performing teams.

Customer Focus

Systems fall into one of two categories: core and context. Core generates a dura‐

ble competitive advantage for the business; context is everything else. Security for

most businesses is context; it exists to enable core but is not core itself, as it is not

generally a source of competitive advantage. This is shown by the fact that secu‐

rity operates as an enablement team and is there to support core in delivering the

greatest value.

1.5 DevSecOps | 9

What Is DevSecOps?

DevSecOps is the natural extension of DevOps into the security domain. You are now

charged with a goal of enabling business agility securely. Within that shift comes peo‐

ple, process, and tool changes, but it is important to understand that it is, at its core, a

shift in culture. Simply replacing tools in isolation will not allow you to thrive in the

new reality, no matter what the vendor might say.

As I said previously, security operates in the domain of risk. As part of the approval

and testing processes, for a change, you are trying to build confidence that you are

not introducing a large amount of risk. This is analogous to functional testing of soft‐

ware: you cannot prove the nonexistence of bugs, but you can pass a confidence

threshold, meaning that you can release into production. Proving that a change con‐

tains no security issues is impossible; being confident that a major issue is not intro‐

duced is possible. This brings us to the following two properties of a change that

impact risk:

Size of the change

Size is the most critical property of a change to consider when looking at risk.

The more you change, the more risk is involved. This is something that is hard to

definitively measure, as most things in security unfortunately are. But as a base,

heuristic lines of code are effective more often than not. You want many small

changes as opposed to fewer large ones. This allows you to more easily under‐

stand which change caused an adverse impact and to more effectively peer-

review the change, and it means that one bad change does not block all other

changes.

Lead time for changes

Based on our shared understanding that changes with security vulnerabilities are

inevitable, the speed with which you can resolve introduced issues becomes cru‐

cial. The total risk posed by a change is capped by the length of time it is exposed

and live. When an issue is discovered in production, the lower the lead time, the

less the exposure. In reality, the teams that pose the greatest challenge when first

embarking on DevSecOps, the pioneers moving the fastest, have the highest

potential for security. The days of “move fast and break things” are behind us;

today’s mantra is “Better Value, Sooner, Safer, Happier”.

Resolving issues with roll forward versus roll back

Upon discovery of an issue, in an ideal world you want to roll forward—introduce a

new change to resolve the issue—rather than roll back and revert all changes. An

operationally mature team has more options—the same processes and tools that

allow them to deploy many times a day give them a scalpel to target and resolve

issues. Teams early in their DevOps journey often only have sledgehammers, mean‐

ing that the business impact of resolving an issue is much worse.

10 | Chapter 1: Security in the Modern Organization

Continuous integration and continuous delivery

Continuous integration (CI) and continuous delivery (CD) are two foundational pat‐

terns that enable DevOps; they are how system change happens. Teams possess a

CI/CD pipeline which takes their code changes and applies them to environments.

Security teams possessing their own pipelines can rapidly enact change, while hook‐

ing into all pipelines in the organization allows them to enact change at scale.

Before I discuss what exactly continuous integration and continuous delivery are, let’s

segue briefly into how code is stored.

Version Control

Version control is the process of maintaining many versions of code in parallel. There

is a base branch, often called trunk or main, which has a full history of every change

that has ever happened. When a team member wishes to make a change, they make a

new branch, make their changes independently, and merge them back into the base

branch.

Companies will have at least one version control system they use, most commonly

GitHub, GitLab, or BitBucket. Becoming familiar with how version control operates

is a required skill for the modern security engineer.

What is continuous integration?

Continuous integration is the practice of regularly testing, at least daily, against the

base branch. Its primary purpose is to check that the proposed changes are compati‐

ble with the common code. Often, a variety of checks and tests are run against the

code before it is allowed to be merged back into the base branch, including a human

peer review.

As this process allows for barring changes, you can embed security tooling that ana‐

lyzes code and dependencies to stop known issues from being merged and ever

reaching a live environment.

What is continuous delivery?

Continuous delivery is the practice of having the common code maintained in a

deployable state; i.e., the team is able to perform a production release at any time. The

intent is to make releasing a business decision rather than a technical one. As the

code exists to fulfill a business need, it makes sense for this decision to be purely busi‐

ness driven.

This approach runs in opposition to significant human oversight on changes. A

mandatory human-operated penetration test before release means that continuous

delivery cannot be achieved, and the business loses agility as its ability to react is

constrained.

1.5 DevSecOps | 11

What is continuous deployment?

Continuous delivery and deployment are often confused, as they are very closely

related. Continuous deployment is the practice of performing an automated produc‐

tion release when new code is merged into the shared common code. By building the

apparatus around this, teams can be elite and release tens to hundreds of times a day.

The level of automation required shifts almost 100% of quality control onto tooling,

with the sole human interaction being the peer review. Teams reaching for this goal

introduces a need for a mature, fully automated DevSecOps tool chain.

CI/CD pipelines

As mentioned previously, teams possess CI/CDs, which is how change is applied to

environments. These pipelines are the only way to make production changes, and

provide the vector for embedding practices across all teams in an organization. As

long as you can automate something, it can become part of a pipeline and can be run

against every change as it makes its way to production and even after. Pipelines

become the bedrock for the technical aspects of the DevSecOps cultural shift.

Want to start running dependency checks of imported packages? Embed it into the

pipeline. Want to run static code analysis before allowing the code to be merged?

Embed it into the pipeline. Want to check infrastructure configuration before it’s live

in the cloud? Embed it into the pipeline.

Additionally, these pipelines operate as information radiators. As all change goes

through them, they become the obvious choice for where to surface information

from. As I am now broaching the topic of measuring the impact of security, many of

the metrics are observed from the pipelines themselves.

1.6 How to Measure the Impact of Security

I often find myself quoting Peter Drucker: “What gets measured, gets managed.” With

that in mind, how can you tackle measuring the impact of security? This has often

proved to be a vexing question for many chief information security officers (CISOs),

as security is only ever top of mind when something has gone wrong. While I do not

believe I have the one true answer, let’s discuss some ideas and heuristics that are

often used.

Time to Notify for Known Vulnerabilities

As modern systems are built on the shoulders of giants—i.e., software is built

depending on frameworks and libraries from innumerable developers and companies

—we need an ability to notify teams when one of their dependencies is known to

have a potential vulnerability.

12 | Chapter 1: Security in the Modern Organization

For example, I’m building a serverless function in Python, and I have used the latest

version of a library. Two days after that code is deployed into production, a vulnera‐

bility is identified and raised against the library. How long a wait is acceptable before

my team is notified of the vulnerability?

Time to Fix a Known Vulnerability

Coupled to the notification time, what is an acceptable wait time for the vulnerability

to be fixed? Fixing in this context can take a few different guises, the simplest being

deploying a new version of the function with a patched, updated library; slightly

more complicated is the decommissioning of the function until a patch is available,

and potentially most complicated is self-authoring the library fix.

The selection of the solution is context specific, but the metric will help drive matur‐

ity and will produce concrete examples around what risk is truly tolerable from the

business.

Service Impacts Incurred Through Security Vulnerabilities

Often the quickest way to close a potential security threat is to turn something off,

whether literally flicking a switch or making something inaccessible. As an organiza‐

tion operationally matures, the service impact of fixing a security issue should be neg‐

ligible. As talked about previously, you want to roll forward fixes, thereby not impact‐

ing service availability, but there will be cases along the journey where it is better to

place the service in a degraded state while the fix is applied. Improvements in this

metric are correlated with increased operational maturity.

Attempted Breaches Prevented

Modern tooling is sophisticated enough to identify breaches being attempted or ret‐

roactively identify past breach attempts. To make the impact of investment in security

more tangible, understanding how many potential incidents have been prevented is a

powerful metric to obtain. It is important, however, that there is nuance in the meas‐

urement. Being able to drill down to the component level is crucial; for example,

reducing your attack surface by decommissioning infrastructure will make the aggre‐

gate count decrease but could be misconstrued as a loss in tooling efficacy, or it could

simply be that fewer attempts are being made.

Compliance Statistics

Having a robust set of controls that define compliant cloud resource configurations is

crucial in a scalable security approach, as you will see in greater detail later in the

book. For now, consider an AWS organization with hundreds of S3 buckets spread

across tens of accounts—you should be able to track and report on how many of

1.6 How to Measure the Impact of Security | 13

them have sufficient levels of server-side encryption enabled. By tracking this metric

across many resource types and configuration options, you can understand our base‐

line security posture at scale and show the impact of security initiatives.

Percentage of Changes Rejected

As part of a security team’s enablement objective, over time you need to determine

efficacy. Over time, teams should understand the security context they operate

within, and doing things securely should be the default. A metaphor I like for this is

that developers are like lightning—they pursue the path of least resistance. If you can

make the secure path the one of least resistance, you will observe the percentage of

changes rejected on security grounds decrease over time.

1.7 The Principles of Security

By establishing principles, a common set of beliefs, and embedding them through

action, you make significant progress on two pivotal goals. First, you strengthen a

culture that takes security seriously. Second, you build the foundations for autonomy.

Fundamentally, scaling is achieved by giving people the tools and mental models

required to make the correct decisions. It is not enough for people to be able to parrot

answers back; they need to be able to arrive at the same answer independently. To that

end, let’s look at a starting set of principles.

Least Privilege

Often the first principle that comes to mind when discussing security, the principle of

least privilege is that actors in the system, both human and robot, have enough privi‐

lege to perform their jobs and no more. For example, a human cannot make changes

to production environments without using the CI/CD pipeline, or a system cannot

provision infrastructure in regions that are not needed for the application.

Currently this is hard to achieve and maintain. As I have already discussed, the busi‐

ness needs to be agile, which means that the scope of permissions someone requires

can rapidly change. The most common issue I’ve seen is that although getting exten‐

ded permissions is normally streamlined and fairly trivial, permissions are rarely

revoked or challenged. Often what was least privileged in the past is now overly privi‐

leged due to a decrease in scope. We’ll evaluate recipes later in the book around both

the initial creation of permission sets and their ongoing maintenance.

Only as Strong as Your Weakest Link

Your security posture is not determined by your strongest point but by your weakest.

Having a castle doesn’t help keep you safe if you leave the gate unlocked and open.

14 | Chapter 1: Security in the Modern Organization

When you look at how to implement cloud native security, you need to make sure

you’re focusing on the weak points, not reinforcing areas of strength.

There’s no value in investing significant time in finely tuned identity and access man‐

agement (IAM) policies, if users are not ubiquitously using multifactor authentication

(MFA).

Defense in Depth

This principle is closely related to the concept of weakest links. To have a robust secu‐

rity posture, you need layered solutions. For example, company systems are often

only accessible over a virtual private network (VPN), and the intent is that the VPN is

only accessible by authenticated users; however, you should not implicitly trust that

all users coming from the VPN address space have been authenticated. Otherwise, a

compromise in one system cascades, and the potential impact of a breach is magni‐

fied.

Another example is when designing networking, as discussed in Chapter 5. Applica‐

tions in the cloud have distinct identities that define access, beneath that should be

additional firewall rules that use IP address ranges to allow groups of systems to com‐

municate, and beneath that are the routes which dictate where traffic can flow. These

combine to iteratively reduce the blast radius of a breach: a compromise in one layer

does not completely negate all controls.

Security Is Job Zero

A phrase initially coined by Amazon, this principle speaks to how security is the first

step in the process. Although I have already discussed how not everyone can be secu‐

rity experts, everyone must become security conscious, literate, and cognizant. Allow‐

ing people to look through a security lens is the most critical aspect of a security

team, which we’ll discuss as part of the enablement strategies in Chapter 4.

Culturally, security has to be the basis from which both technical and social systems

are built. An insecure foundation will undermine anything built on top of it. Bad

password management undoes password complexity requirements. Unencrypted

traffic can make encryption at rest pointless.

You can’t out-engineer a culture that doesn’t value security at its core.

Quality Is Built In

This principle goes hand in hand with security as job zero. Historically, security was a

“bolt-on”—once the functionality was built, it was then made secure, to varying levels

of efficacy. In a world centered around the need for business agility, it is hard to see

how this “bolt-on” approach, even if it was effective in preventing incidents, allowed

teams to be agile and effective. Security is an aspect of system quality. In the

1.7 The Principles of Security | 15

preceding principle, it is the alpha quality; without a secure foundation the change

should never see the light of day. Code, architectures, and systems need to be

designed to allow for security, meaning that security is something that needs to be

prioritized and invested in from day one.

Businesses can often be myopic in the pursuit of new functionality, and under pres‐

sure to hit release dates, security is often deprioritized. This technical debt accrues

over time and becomes incredibly expensive to pay back, orders of magnitude more

than was initially required to build in at the start.

DevSecOps initiatives, tooling, and processes like threat modeling make security a

first-class initiative from before a line of code is written. By enforcing security stand‐

ards from the beginning, it is no longer work that can be dropped when there is

schedule pressure. It’s part of the standard operating procedure.

Chapter Summary

Let’s review the learning objectives.

Modern life means that ever more value is being created digitally, and with that come

more incentives for cyber criminals and worse damages as regulation increases. As

the attacks grow in sophistication, so must our defenses. Cloud native security is

security principles applied in true symbiosis with the cloud, ensuring that you are

building fit-for-purpose processes, using the right tools, and making sure our people

understand the new reality.

Security is an enablement function in a modern organization, as opposed to the gate‐

keeper position it often previously occupied. It needs to allow for change to flow

quickly, easily, and safely. Security exists to manage risk, both at the macro and micro

levels. Risk is introduced through change, so being able to understand change at scale

is critical in managing risk. Smaller, more frequent change is far less risky than bigger,

less frequent change.

DevSecOps is a cultural shift that transforms how security works in concert with

delivery teams. You cannot achieve DevSecOps by buying a new tool; instead, it is a

deep-rooted change that starts and ends with people. I talked about a few quantitative

measures that could be used together to understand how security is maturing at your

organization, such as the percentage of compliant infrastructure, the speed with

which the issues are rectified, and the number of potential breaches negated.

The fundamental principles of security have not changed in decades; instead it is

their application that has changed. From least privilege to defense in depth, under‐

standing these principles enables you to form a security strategy and understand how

the recipes in this book stem from a strong, principled foundation.

16 | Chapter 1: Security in the Modern Organization

With the introduction done, we’ll now look at the recipes that allow you to establish a

solid foundation in the cloud. As with a shaky foundation, everything built on top

will quickly come crashing down around you.

1.7 The Principles of Security | 17

CHAPTER 2

Setting Up Accounts and Users

In Chapter 1, you saw the principles that underly modern security and, specifically,

how security functions as cloud native becomes the dominant paradigm in the indus‐

try. Now, let’s move on to the initial configuration of your cloud estate. Investing in a

scalable, programmatic approach will let you move with the business, allowing teams

to rapidly onboard and innovate and allowing you to focus your energies and efforts

on higher-value activities.

You’ll see how to do the following:

• Create the fundamental building blocks of cloud estates

• Deploy a region-based guardrail to constrain teams to only approved regions

• Centralize and create users to allow people to access your estate in a manageable

way

2.1 Scalable Project Structures on GCP

Problem

You need to deploy the projects required to rapidly onboard a new team in to your

organization.

Solution

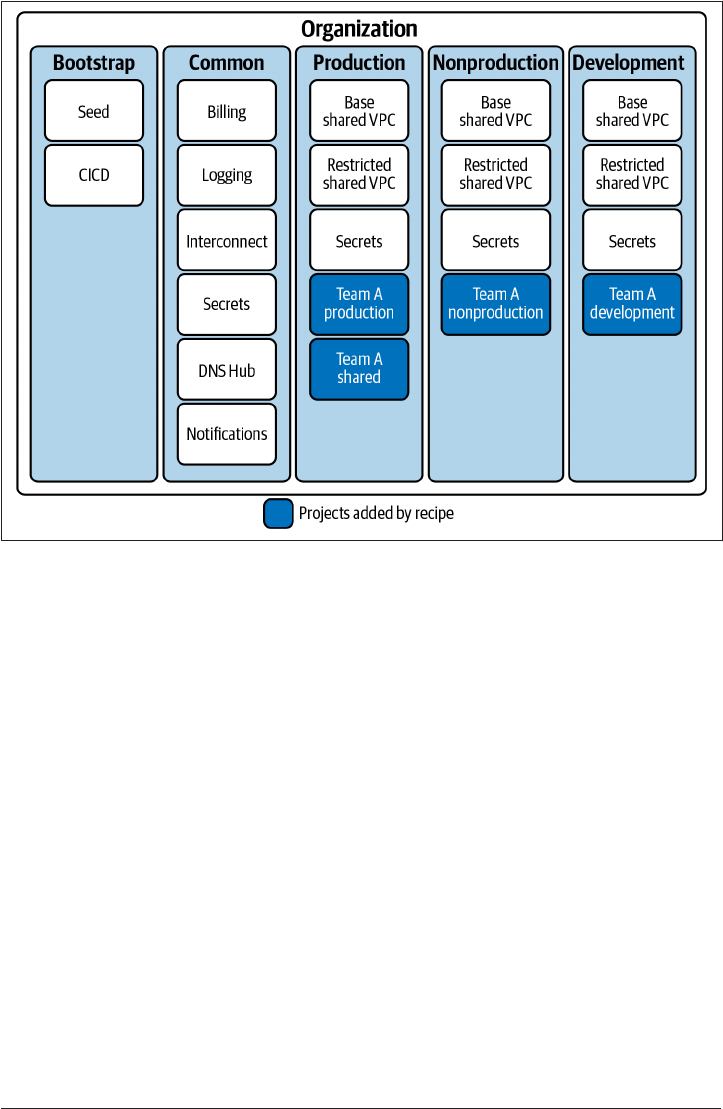

In this recipe, you will deploy a new folder for a team, with the four required projects

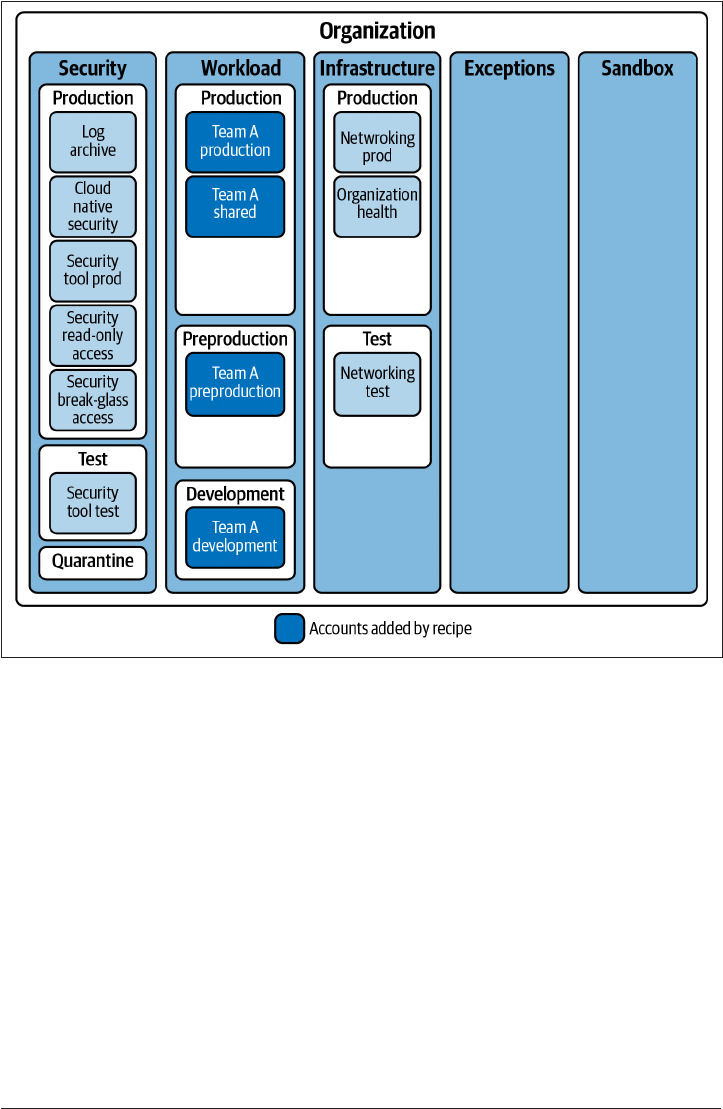

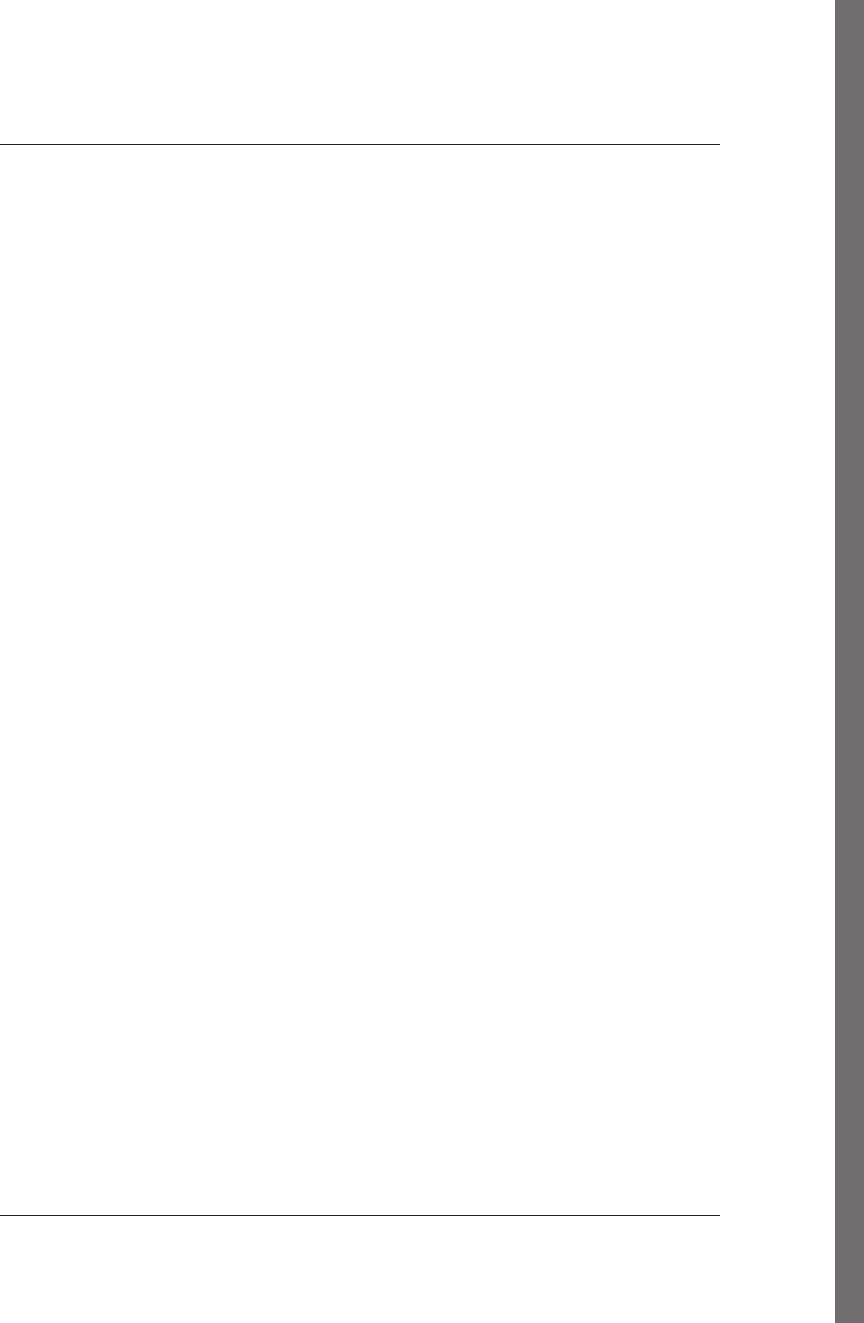

underneath, as shown in Figure 2-1.

19

Figure 2-1. Recommended organization structure

If you haven’t already done so, familiarize yourself with Terraform and the different

authentication mechanisms in Chapter 11.

Create a variables.tf file and copy the following contents:

variable "production_folder_name" {

type = string

description = "The name of the production folder"

}

variable "nonproduction_folder_name" {

type = string

description = "The name of the nonproduction folder"

}

variable "development_folder_name" {

type = string

description = "The name of the development folder"

}

variable "project_prefix" {

type = string

description = "Used to prefix the project names to ensure global uniqueness"

20 | Chapter 2: Setting Up Accounts and Users

}

variable "team_name" {

type = string

description = "The name of the team to be onboarded"

}

Then fill out the corresponding terraform.tfvars file:

production_folder_name = ""

nonproduction_folder_name = ""

development_folder_name = ""

project_prefix = ""

team_name = ""

Create the following provider.tf file and run terraform init:

provider "google" {}

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "~> 3"

}

}

}

Create the following main.tf file and run terraform plan:

data "google_folder" "production" {

folder = var.production_folder_name

}

data "google_folder" "nonproduction" {

folder = var.nonproduction_folder_name

}

data "google_folder" "development" {

folder = var.development_folder_name

}

resource "google_project" "production" {

name = "${var.team_name}Production"

project_id = "${var.project_prefix}-${var.team_name}-prod"

folder_id = data.google_folder.production.name

}

resource "google_project" "preproduction" {

name = "${var.team_name}PreProduction"

project_id = "${var.project_prefix}-${var.team_name}-preprod"

folder_id = data.google_folder.nonproduction.name

}

2.1 Scalable Project Structures on GCP | 21

resource "google_project" "development" {

name = "${var.team_name}Development"

project_id = "${var.project_prefix}-${var.team_name}-dev"

folder_id = data.google_folder.development.name

}

resource "google_project" "shared" {

name = "${var.team_name}Shared"

project_id = "${var.project_prefix}-${var.team_name}-shared"

folder_id = data.google_folder.production.name

}

Review the resources that are going to be created, and then run terraform apply to

make the changes.

Discussion

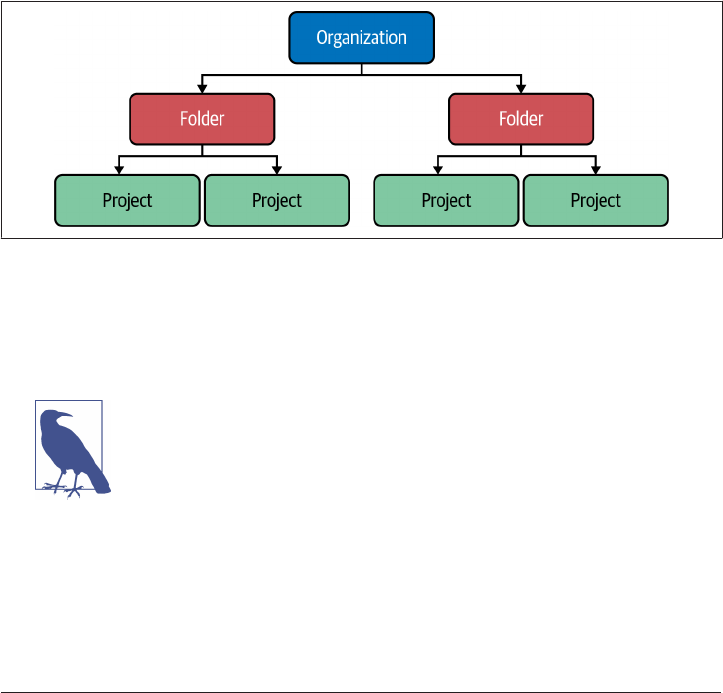

On Google Cloud Platform (GCP), you have a three-tier resource hierarchy that

allows you to define your organizational structure, as shown in Figure 2-2.

Figure 2-2. e organization hierarchy in GCP

Through a GCP organization, you are able to centrally manage identity (see Recipe

2.7), apply policies (see Recipe 2.4), and get visibility of security issues at scale (see

Recipe 3.1).

It is highly recommended that you have two organizations set up.

This allows you to test and measure the impact of changes made at

the organization level, e.g., enabling a new organization policy for

the entire organization.

22 | Chapter 2: Setting Up Accounts and Users

Core folders

Google best practice recommends five core folders you should have underneath your

organization:

•

Common, which contains the centralized and shared resources for the entire

organization. It can include the following projects:

— Billing, which gives centralized management of billing logs.

—

Logging, where you store an immutable copy of all logs for audit and investi‐

gation purposes.

— Interconnect, where you deploy the Cloud Interconnect and related resources

for hybrid networking.

— Secrets, for housing the organization-wide secrets allowing for centralized

access, management, and rotation.

— DNS Hub, for configuring peered DNS between Google Cloud and on-

premise.

—

Notifications, where you centrally configure alerting from a Security Com‐

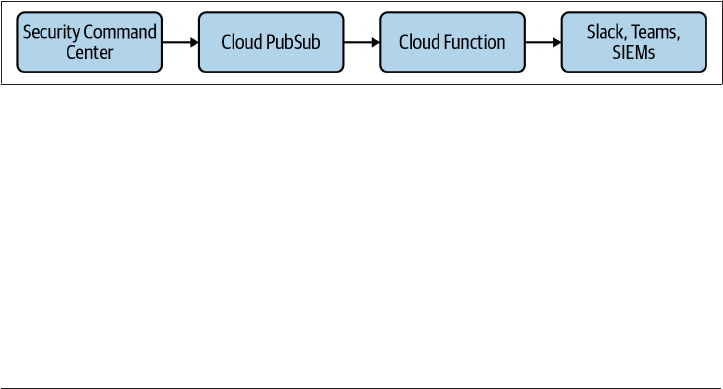

mand Center (see Recipe 3.1).

• Production, which holds all the projects containing production resources. In

addition to one or more projects for each production application, it can include

the following projects:

— Base Shared VPC, which hosts the base production shared VPC.

— Restricted Shared VPC, which hosts the restricted production shared VPC.

— Secrets, for housing the shared production secrets.

•

NonProd, which holds all the projects containing nonproduction resources. It

will have a nonproduction copy of all production projects.

• Dev, which holds all the projects containing development resources. It will have a

development copy of all production projects.

• Bootstrap, which contains the projects used to create the resource hierarchy. It

will contain these two projects:

— CI/CD, which houses the CI/CD pipeline to deploy the resource hierarchy.

— Seed, which contains the Terraform state and service account required for the

CI/CD pipeline to operate.

To create the Bootstrap folder and move the current project underneath it, copy and

apply the following Terraform. The current project then becomes the Seed project

outlined previously.

2.1 Scalable Project Structures on GCP | 23

resource "google_folder" "bootstrap" {

display_name = "Bootstrap"

parent = data.google_organization.this.name

}

resource "null_resource" "move_initial_project" {

provisioner "local-exec" {

command = "gcloud beta --quiet projects move ${var.project_id}" +

"--folder ${split("/", google_folder.bootstrap.id)[1]}"

}

}

Per workload projects

This recipe created the following four projects for the team onboarding to your GCP

organization:

• Production, which holds all the resources for the production instance of the

service:

— User access to this project should be disabled by default.

— All change should happen via infrastructure as code and CI/CD pipelines.

—

In an emergency, user access can be reenabled.

• Preproduction, which holds a full copy of production without production data.

— This project is used to test changes before promoting them to production.

—

Access to this project should be identical to production, by default through

automation.

— User changes result in differences between nonproduction and production,

which decreases testing efficacy, resulting in more production issues.

• Development, which is for developers to rapidly experiment against.

—

Developers should have direct access to this project.

— By allowing developers direct access, they are able to iterate much faster.

— Ideally, changes are made through locally executed infrastructure as code.

• Shared, which holds the shared infrastructure for the application.

— For example, DNS infrastructure and artifact repositories.

—

Changes here should be done via infrastructure as code through CI/CD

pipelines.

— If the CI/CD pipelines fail, then user access will be required to recover them.

24 | Chapter 2: Setting Up Accounts and Users

Strategies for converging organizations

Over time, it often becomes necessary to migrate projects between GCP organiza‐

tions. This can be due to mergers and acquisitions, business requirements necessitat‐

ing multiple organizations, or pulling together business unit–owned organizations

under centralized IT management.

To enable this, two folders are often used temporarily when migrating projects

between organizations: Import and Export. These folders are used during the

onboarding and offboarding process, respectively, and allow you to test that the

workloads in the projects operate as expected when moved. This also means that you

can explicitly only allow for the migration of projects that are contained within these

folders, allowing you to more closely observe the principle of least privilege.

As of the time of writing, migrating projects between organizations is an alpha fea‐

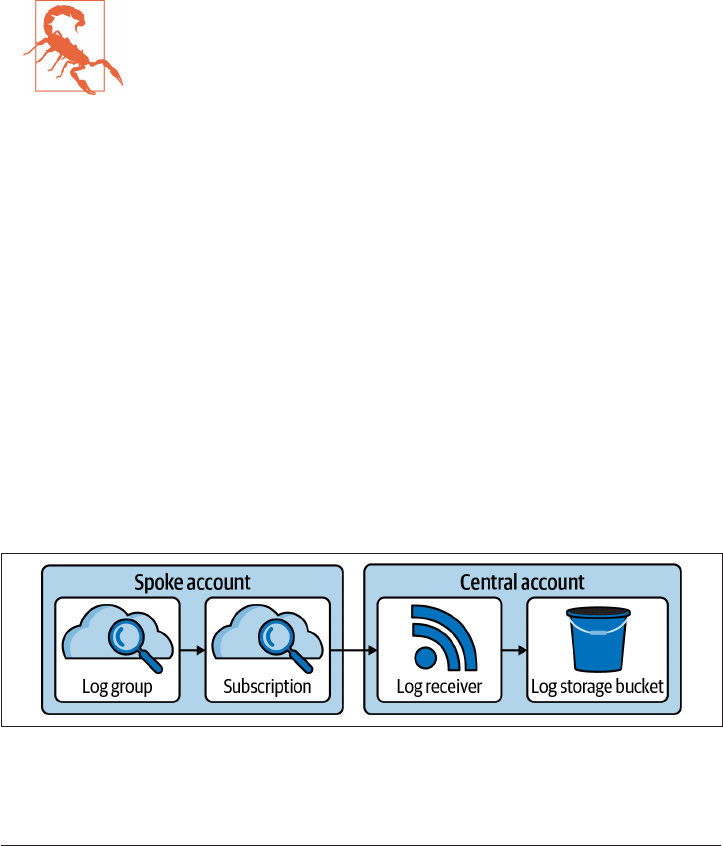

ture, whereas historically it required a support ticket to action. As this process is

becoming increasingly common among companies, let’s discuss the potential strate‐

gies that exist:

Migrate projects

Depending on the nature of the resources within a project, this can vary in diffi‐

culty from trivial to requiring significant amounts of planning. Using Cloud

Asset Inventory allows you to understand all the resources within a project, the

details of which can be exported to BigQuery, allowing you to run queries to

determine the required approach.

Migrate workloads

into fresh accounts. If the workload has been created with infrastructure as code

and its data is not cost prohibitive to move, it can be easier to simply stand the

workload up in a new project within the new organization. For a lot of develop‐

ment and test workloads that do not have full production databases, this can be

an easy solution to test that the new organization is configured to support pro‐

duction.

Upgrade the existing foundation

It is possible to maintain multiple organizations such that they function similarly

with high levels of infrastructure as code. This should only be selected as the

enduring option when it proves too complex or expensive to bring all workloads

under a single organization.

Maintain all organizations

Not considered an enduring option, based on business priorities, it can make

sense to allow two organizations to coexist and not make short-term investments

into merging them together. However, over the long term, this can prove very

2.1 Scalable Project Structures on GCP | 25

expensive as significant amounts of engineering effort must be expended to

maintain both to a sufficient standard.

26 | Chapter 2: Setting Up Accounts and Users

Summarizing the Recipe

Let’s summarize what was covered in this recipe:

•

GCP organizations are built from a root organization node, with folders and

projects underneath.

• A set of core folders suffice for the requirements of most organizations:

—

Bootstrap, where the initial project goes and the organization is modified

from.

— Common, where the core infrastructure pieces such as shared networking

exist.

— Production, where delivery teams are able to deploy their production

workloads.

— NonProduction, where delivery teams are able to deploy their nonproduction

workloads.

—

Development, where delivery teams are able to deploy their development

workloads.

• There are additional folders which organizations often use, and you learned

about having explicit Import and Export folders for managing moving projects

between organizations.

2.2 Scalable Account Structures on AWS

Problem

You need to deploy the accounts required to rapidly onboard a new team into your

organization.

Solution

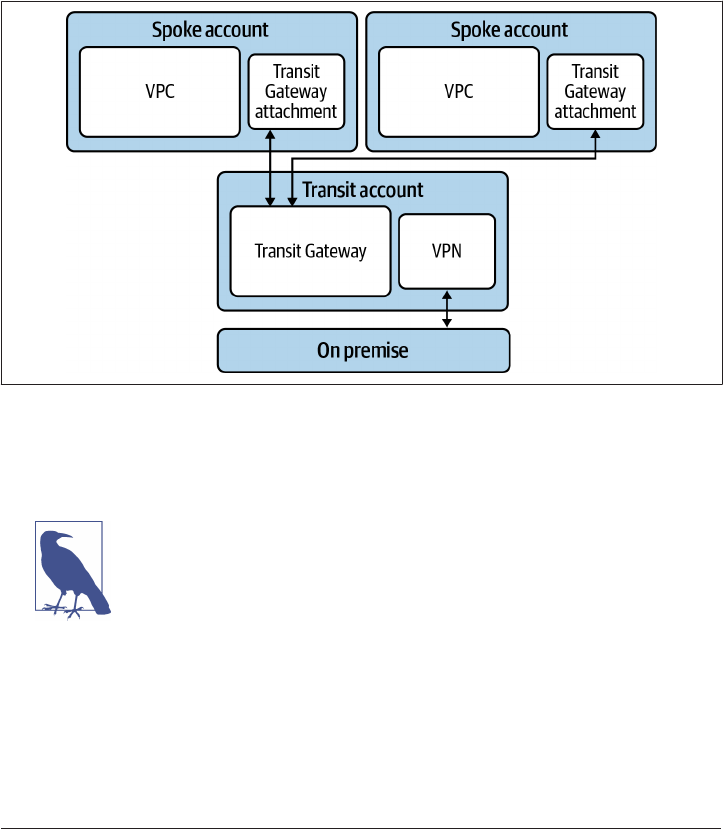

This recipe builds out four accounts, as shown in Figure 2-3.

2.2 Scalable Account Structures on AWS | 27

Figure 2-3. Recommended organization structure

If you haven’t already done so, familiarize yourself with Terraform and the different

authentication mechanisms in Chapter 11.

Create a variables.tf file and copy the following contents:

variable "production_ou_id" {

type = string

description = "The ID of the production OU"

}

variable "preproduction_ou_id" {

type = string

description = "The ID of the preproduction OU"

}

variable "development_ou_id" {

type = string

28 | Chapter 2: Setting Up Accounts and Users

description = "The ID of the development OU"

}

variable "team_name" {

type = string

description = "The name of the team to be onboarded"

}

variable "production_account_email" {

type = string

description = "The production root account email"

}

variable "preproduction_account_email" {

type = string

description = "The preproduction root account email"

}

variable "development_account_email" {

type = string

description = "The development root account email"

}

variable "shared_account_email" {

type = string

description = "The shared root account email"

}

Then fill out the corresponding terraform.tfvars file:

production_ou_id = ""

preproduction_ou_id = ""

development_ou_id = ""

team_name = ""

production_account_email = ""

preproduction_account_email = ""

development_account_email = ""

shared_account_email = ""

Create the following provider.tf file and run terraform init:

provider "aws" {}

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 3"

}

}

}

Create the following main.tf file and run terraform plan:

2.2 Scalable Account Structures on AWS | 29

resource "aws_organizations_organizational_unit" "team" {

name = var.team_name

parent_id = var.organizational_unit_parent_id

}

resource "aws_organizations_account" "production" {

name = "${var.team_name}-production"

email = var.production_account_email

parent_id = aws_organizations_organizational_unit.team.id

}

resource "aws_organizations_account" "preproduction" {

name = "${var.team_name}-preproduction"

email = var.preproduction_account_email

parent_id = aws_organizations_organizational_unit.team.id

}

resource "aws_organizations_account" "development" {

name = "${var.team_name}-development"

email = var.development_account_email

parent_id = aws_organizations_organizational_unit.team.id

}

resource "aws_organizations_account" "shared" {

name = "${var.team_name}-shared"

email = var.shared_account_email

parent_id = aws_organizations_organizational_unit.team.id

}

Review the resources that are going to be created, and then run terraform apply to

make the changes.

Discussion

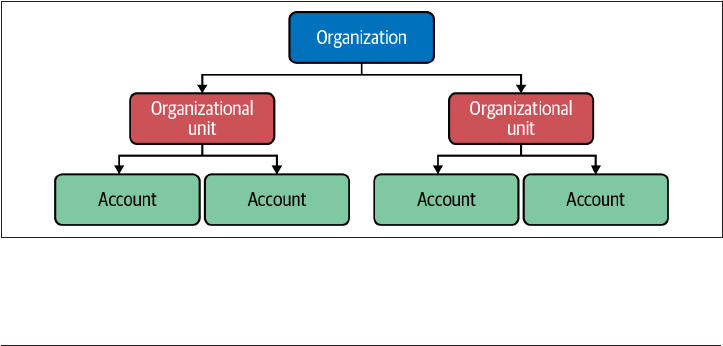

On AWS, cloud estates are built from three foundational resources—organizations,

organizational units, and accounts—which create the hierarchy shown in Figure 2-4.

Figure 2-4. e organization hierarchy in AWS

30 | Chapter 2: Setting Up Accounts and Users

The organization works as the root of the entire estate, and a number of security

activities are done at the organizational level, as it allows you to look holistically

across all accounts. Both Recipes 3.2 and 3.8 aggregate data from across the organiza‐

tion.

It is highly recommended that you have two AWS organizations.

This allows you to test and measure the impact of changes made at

the organization level, e.g., enabling a new service control policy

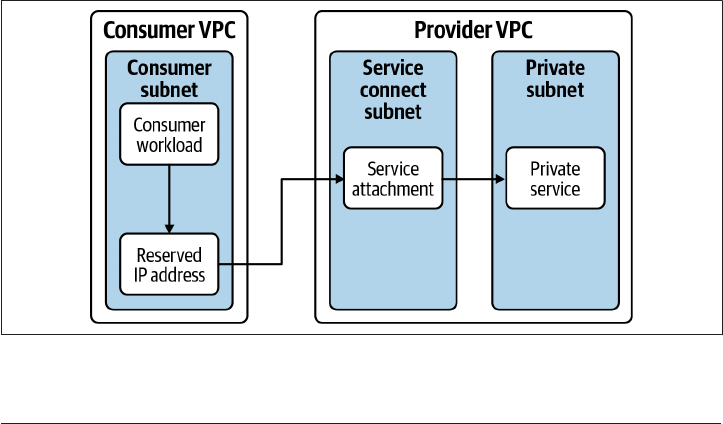

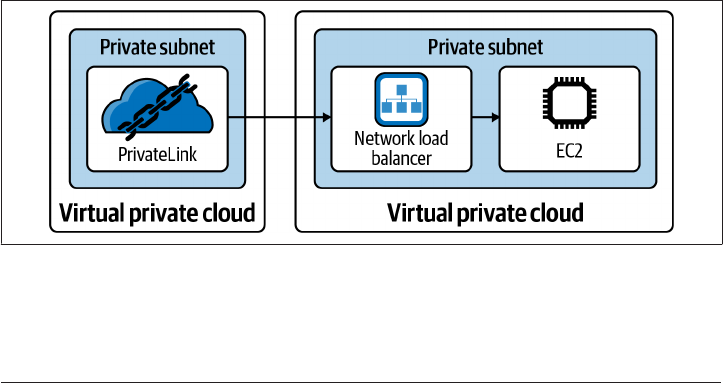

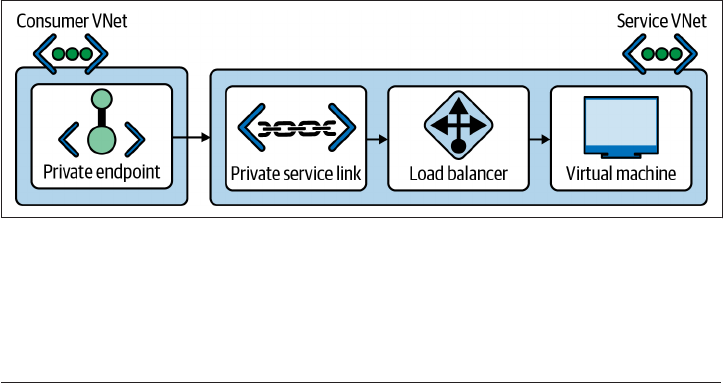

(SCP) for the entire organization, such as in Recipe 2.5.