With Early Release ebooks, you get books in their earliest

form—the author’s raw and unedited content as they write—

so you can take advantage of these technologies long before

the official release of these titles.

Je Carpenter and Patrick McFadin

Managing Cloud Native Data on

Kubernetes

Architecting Cloud Native Data Services using

Open Source Technology

Boston Farnham Sebastopol

Tokyo

Beijing Boston Farnham Sebastopol

Tokyo

Beijing

978-1-098-11139-7

[TK]

Managing Cloud Native Data on Kubernetes

by Jeff Carpenter and Patrick McFadin

Copyright © 2023 Jeff Carpenter and Patrick McFadin. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are

also available for most titles (http://oreilly.com). For more information, contact our corporate/institutional

sales department: 800-998-9938 or corporate@oreilly.com.

Acquisitions Editor: Jessica Haberman

Development Editor: Jill Leonard

Production Editor: Caitlin Ghegan

Copyeditor: TK

Proofreader: TK

Indexer: TK

Interior Designer: David Futato

Cover Designer: Karen Montgomery

Illustrator: Kate Dullea

January 2023: First Edition

Revision History for the Early Release

2021-10-01: First release

See http://oreilly.com/catalog/errata.csp?isbn=9781098111397 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Managing Cloud Native Data on

Kubernetes, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

The views expressed in this work are those of the authors, and do not represent the publisher’s views.

While the publisher and the authors have used good faith efforts to ensure that the information and

instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility

for errors or omissions, including without limitation responsibility for damages resulting from the use of

or reliance on this work. Use of the information and instructions contained in this work is at your own

risk. If any code samples or other technology this work contains or describes is subject to open source

licenses or the intellectual property rights of others, it is your responsibility to ensure that your use

thereof complies with such licenses and/or rights.

This work is part of a collaboration between O’Reilly and Portworx. See our statement of editorial inde‐

pendence.

Table of Contents

1.

Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch

Analytics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Infrastructure Types 8

What is Cloud Native Data? 10

More Infrastructure, More Problems 12

Kubernetes Leading the Way 14

Managing Compute on Kubernetes 15

Managing Network on Kubernetes 15

Managing Storage on Kubernetes 16

Cloud native data components 16

Looking forward 17

Getting ready for the revolution 19

Adopt an SRE mindset 19

Embrace Distributed Computing 20

Summary 23

2.

Managing Data Storage on Kubernetes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Docker, Containers, and State 26

Managing State in Docker 27

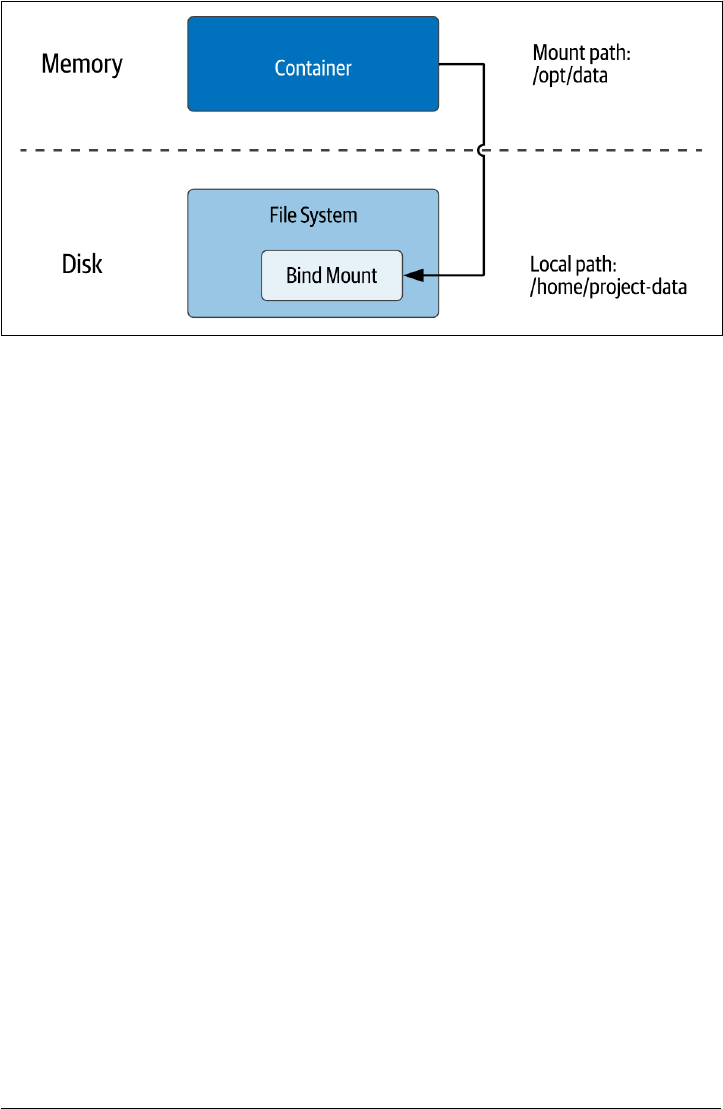

Bind mounts 27

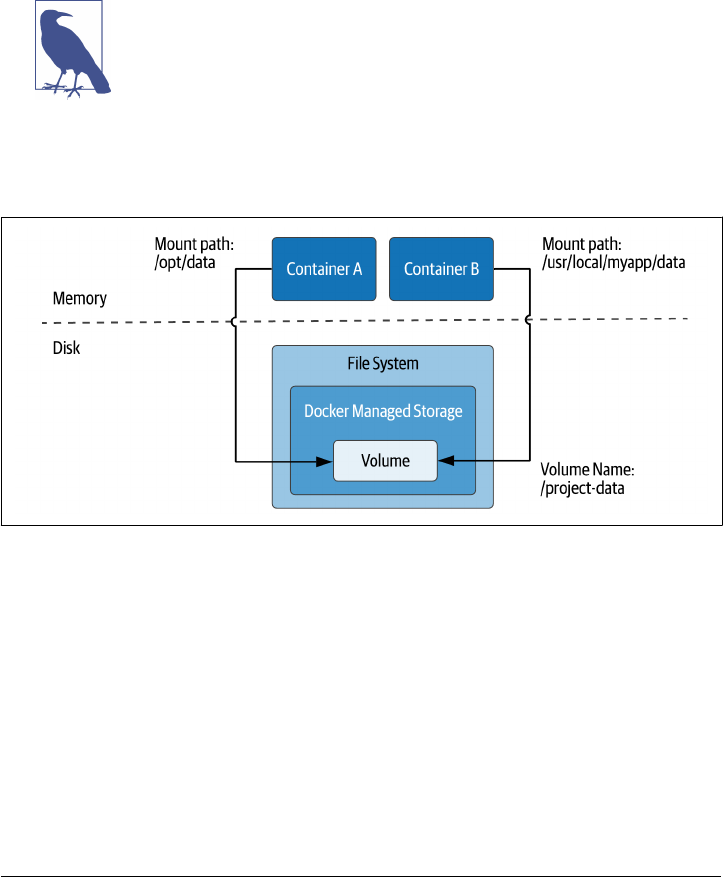

Volumes 28

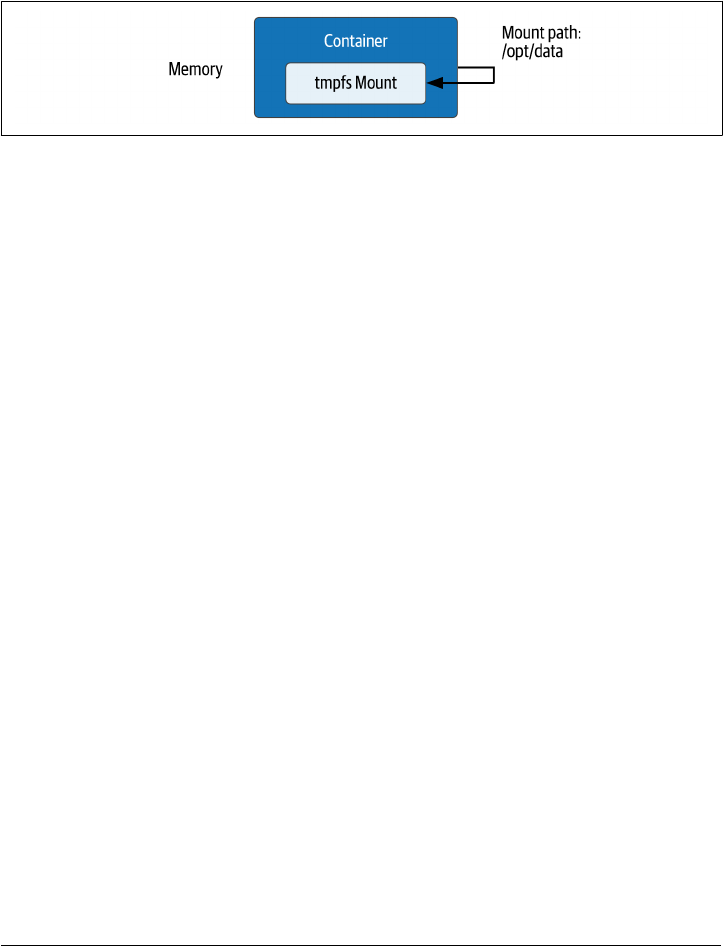

Tmpfs Mounts 29

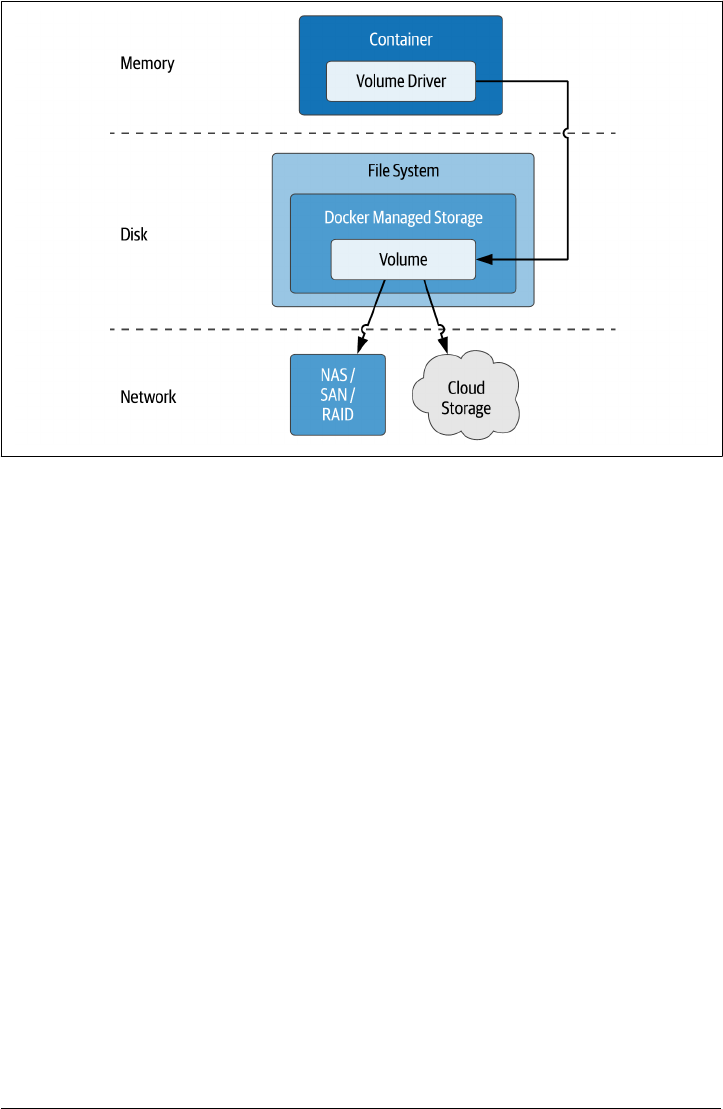

Volume Drivers 30

Sidebar: File, Block, and Object Storage 31

Kubernetes Resources for Data Storage 32

Pods and Volumes 32

PersistentVolumes 39

PersistentVolumeClaims 43

v

StorageClasses 46

Kubernetes Storage Architecture 48

Flexvolume 49

Container Storage Interface (CSI) 49

Container Attached Storage 51

Container Object Storage Interface (COSI) 54

Summary 56

3.

Databases on Kubernetes the Hard Way. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

The Hard Way 58

Prerequisites for running data infrastructure on Kubernetes 59

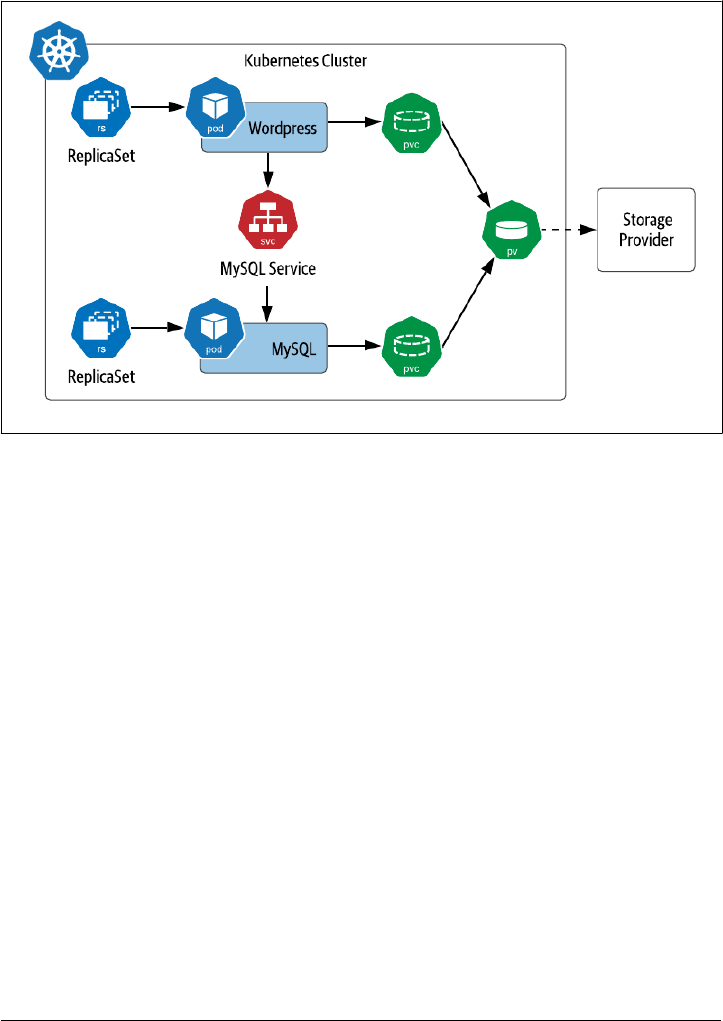

Running MySQL on Kubernetes 59

ReplicaSets 60

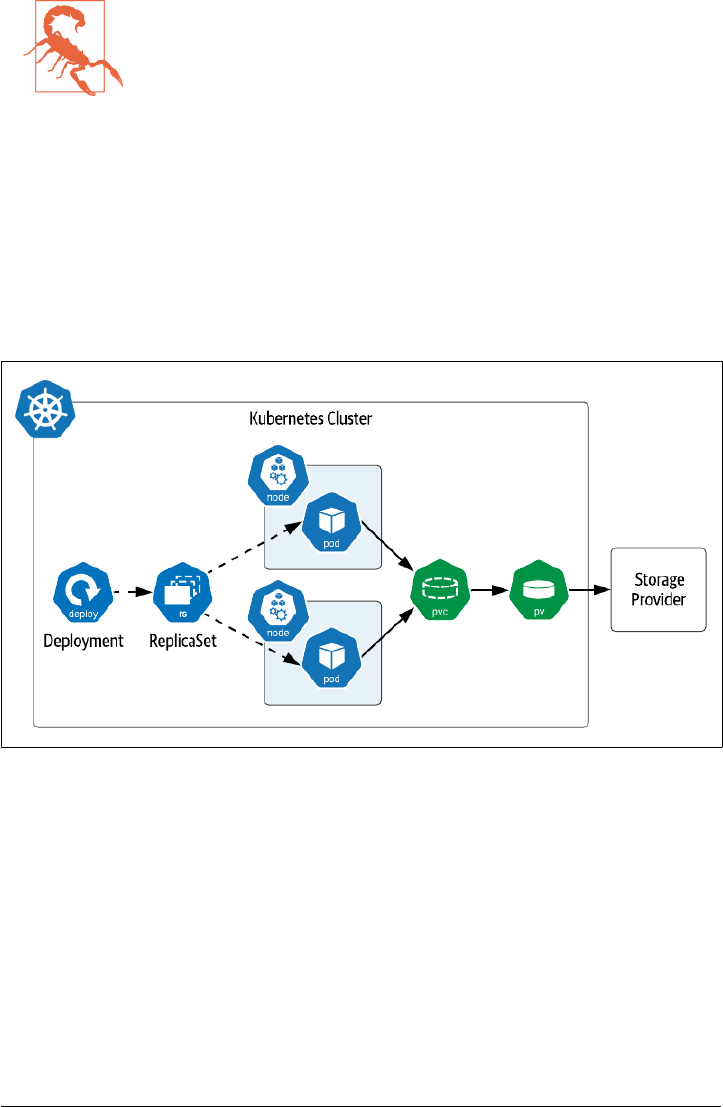

Deployments 62

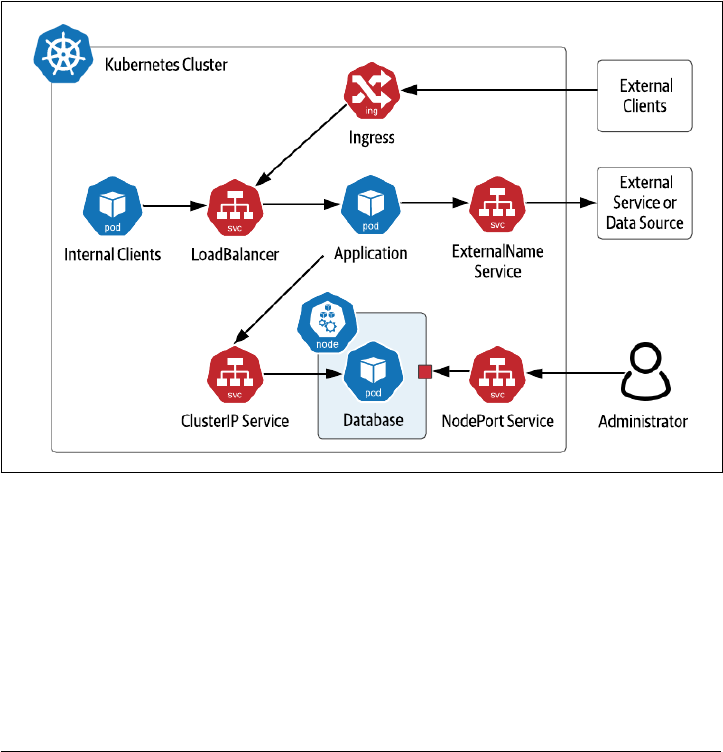

Services 66

Accessing MySQL 69

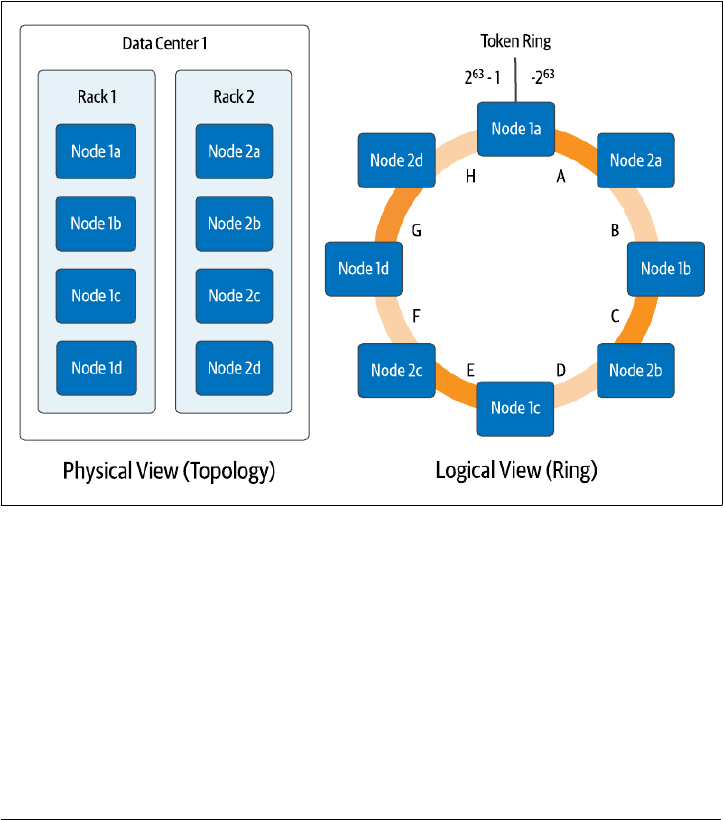

Running Apache Cassandra on Kubernetes 71

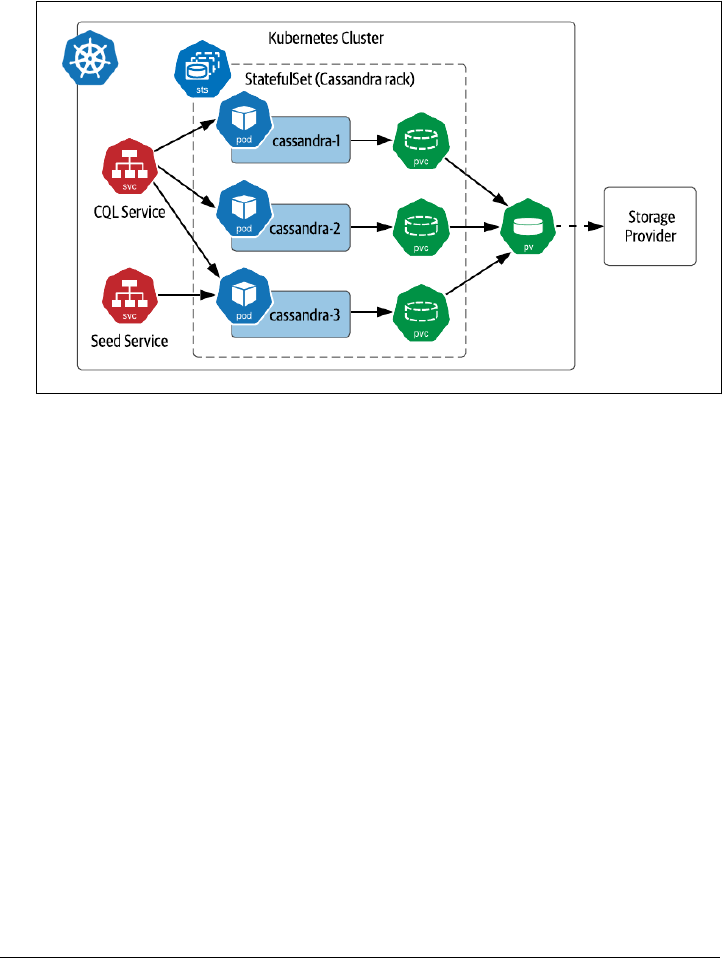

StatefulSets 73

Accessing Cassandra 84

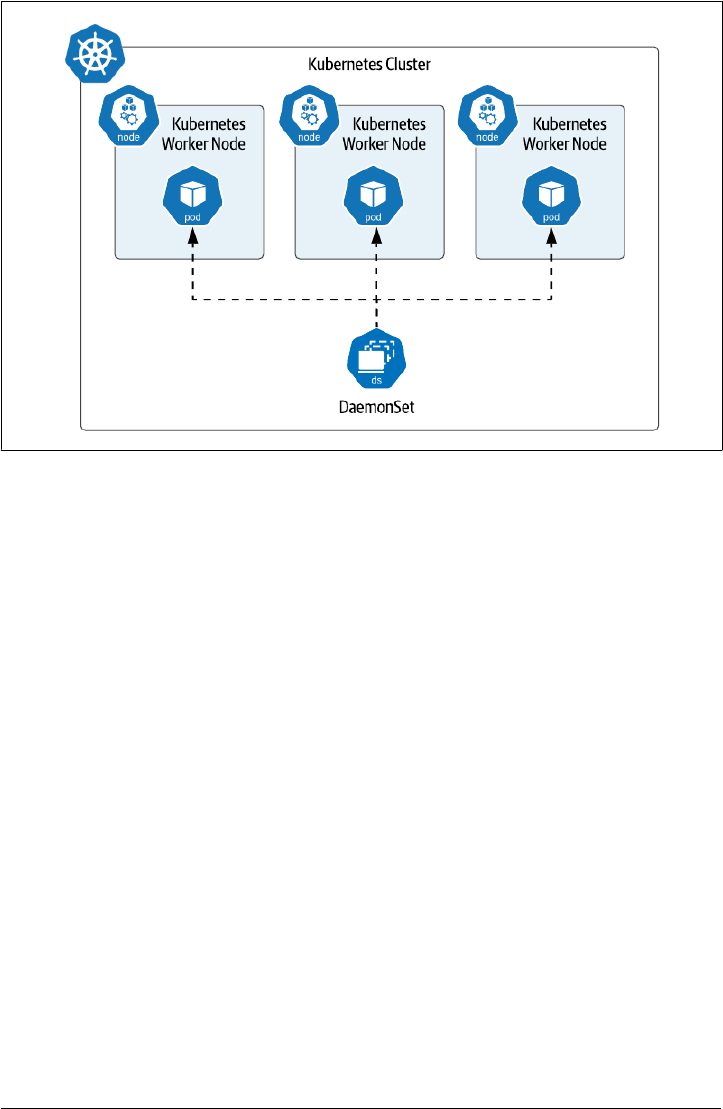

Sidebar: What about DaemonSets ? 85

Summary 86

Index. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

vi | Table of Contents

CHAPTER 1

Introduction to Cloud Native Data

Infrastructure: Persistence, Streaming, and

Batch Analytics

A Note for Early Release Readers

With Early Release ebooks, you get books in their earliest form—the author’s raw and

unedited content as they write—so you can take advantage of these technologies long

before the official release of these titles.

This will be the 1st chapter of the final book. Please note that the GitHub repo will be

made active later on.

If you have comments about how we might improve the content and/or examples in

this book, or if you notice missing material within this chapter, please reach out to the

authors at [email protected] (Jeff Carpenter) and [email protected] (Pat‐

rick McFadin).

Do you work at solving data problems and find yourself faced with the need for mod‐

ernization? Is your cloud native application limited to the use of microservices and

service mesh? If you deploy applications on Kubernetes without including data, you

haven’t fully embraced cloud native. Every element of your application should

embody the cloud native principles of scale, elasticity, self-healing, and observability,

including how you handle data. Engineers that work with data are primarily con‐

cerned with stateful services, and this will be our focus: increasing your skills to man‐

age data in Kubernetes. By reading this book, our goal is to enrich your journey to

cloud native data. If you are just starting with cloud native applications, then there is

7

no better time to include every aspect of the stack. This convergence is the future of

how we will consume cloud resources.

So what is this future we are creating together?

For too long, data has been something that has lived outside of Kubernetes, creating a

lot of extra effort and complexity. We will get into valid reasons for this, but now is

the time to combine the entire stack to build applications faster at the needed scale.

Based on current technology, this is very much possible. We’ve moved away from the

past of deploying individual servers and towards the future where we will be able to

deploy entire virtual data centers. Development cycles that once took months and

years can now be managed in days and weeks. Open source components can now be

combined into a single deployment on Kubernetes that is portable from your laptop

to the largest cloud provider.

The open source contribution isn’t a tiny part of this either. Kubernetes and the

projects we talk about in this book are under the Apache License 2.0. unless otherwise

noted. And for a good reason. If we build infrastructure that can run anywhere, we

need a license model that gives us the freedom of choice. Open source is both free-as-

in-beer and free-as-in-freedom, and both count when building cloud native applica‐

tions on Kubernetes. Open source has been the fuel of many revolutions in

infrastructure, and this is no exception.

That’s what we are building. This is the near future reality of fully realized Kubernetes

applications. The final component is the most important, and that is you. As a reader

of this book, you are one of the people that will create this future. Creating is what we

do as engineers. We continuously re-invent the way we deploy complicated infra‐

structure to respond to the increased demand. When the first electronic database sys‐

tem was put online in 1960 for American Airlines, you know there was a small army

of engineers who made sure it stayed online and worked around the clock. Progress

took us from mainframes to minicomputers, to microcomputers, and eventually to

the fleet management we find ourselves doing today. Now, that same progression is

continuing into cloud native and Kubernetes.

This chapter will examine the components of cloud native applications, the challenges

of running stateful workloads, and the essential areas covered in this book. To get

started, let’s turn to the building blocks that make up data infrastructure.

Infrastructure Types

In the past twenty years, the approach to infrastructure has slowly forked into two

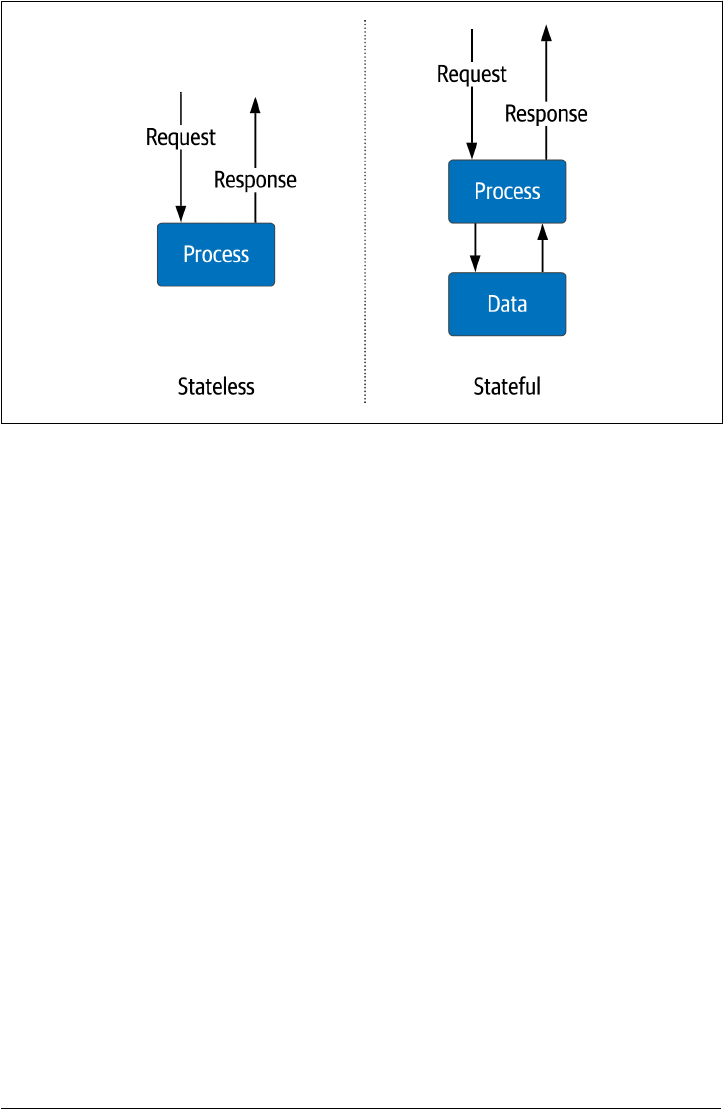

areas that reflect how we deploy distributed applications, as shown in Figure 1-1.

8 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

Figure 1-1. Stateless vs. Stateful Services

Stateless services

These are services that maintain information only for the immediate life cycle of

the active request—for example, a service for sending formatted shopping cart

information to a mobile client. A typical example is an application server that

performs the business logic for the shopping cart. However, the information

about the shopping cart contents resides external to these services. They only

need to be online for a short duration from request to response. The infrastruc‐

ture used to provide the service can easily grow and shrink with little impact on

the overall application. Scaling compute and network resources on-demand

when needed. Since we are not storing critical data in the individual service, they

can be created and destroyed quickly with little coordination. Stateless services

are a crucial architecture element in distributed systems.

Stateful services

These services need to maintain information from one request to the next. Disks

and memory store data for use across multiple requests. An example is a database

or file system. Scaling stateful services is much more complex since the informa‐

tion typically requires replication for high availability, which creates the need for

consistency and mechanisms to keep data in sync. These services usually have

different scaling methods, both vertical and horizontal. As a result, they require

different sets of operational tasks than stateless services.

Infrastructure Types | 9

In addition to how information is stored, we’ve also seen a shift towards developing

systems that embrace automated infrastructure deployment. These recent advances

include:

•

Physical servers gave way to virtual machines that could be deployed and main‐

tained easily.

•

Virtual machines eventually became greatly simplified and focused on specific

applications to what we now call containers.

• Containers have allowed infrastructure engineers to package an application’s

operating system requirements into a single executable.

The use of containers has undoubtedly increased the consistency of deployments,

which has made it easier to deploy and run infrastructure in bulk. Few systems

emerged to orchestrate the explosion of containers like Kubernetes which is evident

in the incredible growth. This speaks to how well it solves the problem. According to

the Kubernetes documentation:

Kubernetes is a portable, extensible, open-source platform for managing containerized

workloads and services that facilitates both declarative configuration and automation.

It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are

widely available.

Kubernetes was originally designed for stateless workloads, and that is what it has tra‐

ditionally done best. Kubernetes has developed a reputation as a “platform for build‐

ing platforms” in a cloud-native way. However, there’s a reasonable argument that a

complete cloud-native solution has to take data into account. That’s the goal of this

book: exploring how we make it possible to build cloud-native data solutions on

Kubernetes. But first, let’s unpack what that term means.

What is Cloud Native Data?

Let’s begin defining the aspects of cloud native data that can help us with a final defi‐

nition. First, let’s start with the definition of cloud native from the Cloud Native

Computing Foundation (CNCF):

Cloud native technologies empower organizations to build and run scalable applica‐

tions in modern, dynamic environments such as public, private, and hybrid clouds.

Containers, service meshes, microservices, immutable infrastructure, and declarative

APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and

observable. Combined with robust automation, they allow engineers to make high-

impact changes frequently and predictably with minimal toil.

Note that this definition describes a goal state, desirable characteristics, and examples

of technologies that embody both. Based on this formal definition, we can synthesize

10 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

the qualities that make a cloud native application differentiated from other types of

deployments in terms of how it handles data. Let’s take a closer look at these qualities.

Scalability

If a service can produce a unit of work for a unit of resources, then adding more

resources should increase the amount of work a service can perform. Scalability

is how we describe the service’s ability to apply additional resources to produce

additional work. Ideally, services should scale infinitely given an infinite amount

of resources of compute, network and storage. For data this means scale without

the need for downtime. Legacy systems required a maintenance period while

adding new resources which all services had to be shutdown. With the needs of

cloud native applications, downtime is no longer acceptable.

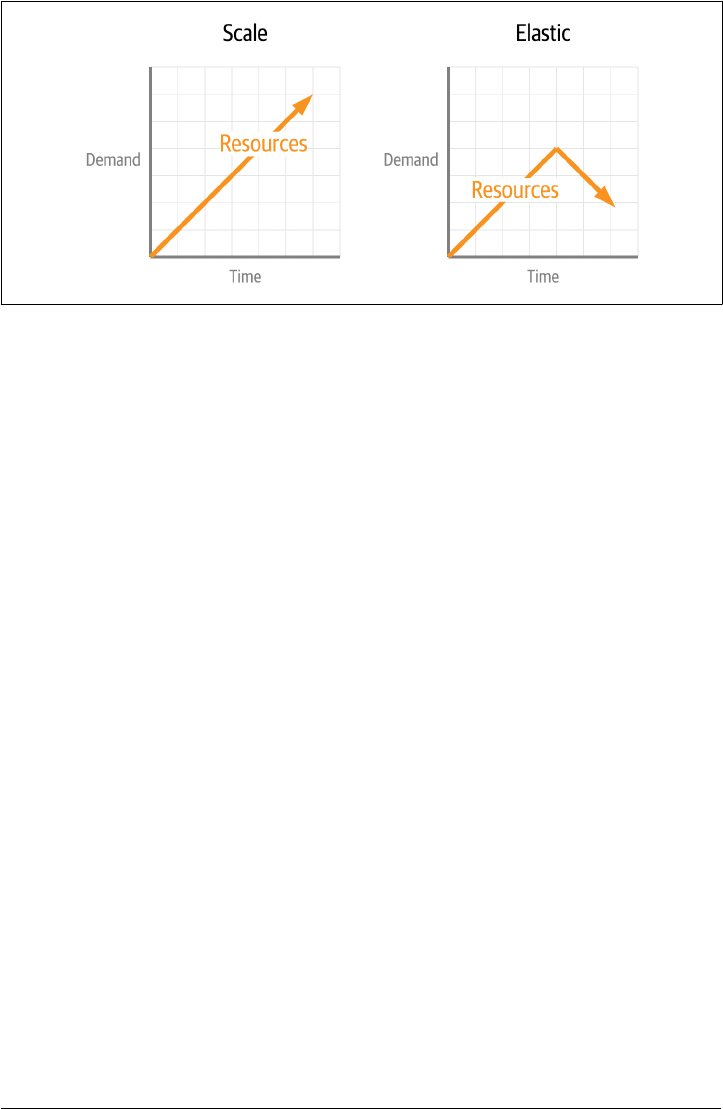

Elasticity

Where scale is adding resources to meet demand, elastic infrastructure is the

ability to free those resources when no longer needed. The difference between

scalability and elasticity is highlighted in Figure 1-2. Elasticity can also be called

on-demand infrastructure. In a constrained environment such as a private data

center, this is critical for sharing limited resources. For cloud infrastructure that

charges for every resource used, this is a way to prevent paying for running serv‐

ices you don’t need. When it comes to managing data, this means that we need

capabilities to reclaim storage space and optimize our use, such as moving older

data to less expensive storage tiers.

Self-healing

Bad things happen and when they do, how will your infrastructure respond? Self-

healing infrastructure will re-route traffic, re-allocate resources, and maintain

service levels. With larger and more complex distributed applications being

deployed, this is an increasingly important attribute of a cloud-native application.

This is what keeps you from getting that 3 AM wake-up call. For data, this means

we need capabilities to detect issues with data such as missing data and data qual‐

ity.

Observability

If something fails and you aren’t monitoring it, did it happen? Unfortunately, the

answer is not only yes, but that can be an even worse scenario. Distributed appli‐

cations are highly dynamic and visibility into every service is critical for main‐

taining service levels. Interdependencies can create complex failure scenarios

which is why observability is a key part of building cloud native applications. In

data systems the volumes that are commonplace need efficient ways of monitor‐

ing the flow and state of infrastructure. In most cases, early warning for issues

can help operators avoid costly downtime.

What is Cloud Native Data? | 11

Figure 1-2. Comparing Scalability and Elasticity

With all of the previous definitions in place, let’s try a definition that expresses these

properties.

Cloud Native Data

Cloud Native Data approaches empower organizations that have adopted the

cloud native application methodology to incorporate data holistically rather than

employ the legacy of people, process, technology, so that data can scale up and

down elastically, and promote observability and self-healing.

This is exemplified by containerized data, declarative data, data APIs, data-

meshes, and cloud-native data infrastructure (that is, databases, streaming, and

analytics technologies that are themselves architected as cloud-native applica‐

tions).

In order for data infrastructure to keep parity with the rest of our application, we

need to incorporate each piece. This includes automation of scale, elasticity and self-

healing, APIs are needed to decouple services and increase developer velocity, and

also the ability to observe the entire stack of your application to make critical deci‐

sions. Taken as a whole, your application and data infrastructure should appear as

one unit.

More Infrastructure, More Problems

Whether your infrastructure is in a cloud, on-premises, or both (commonly referred

to as hybrid), you could spend a lot of time doing manual configuration. Typing

things into an editor and doing incredibly detailed configuration work requires deep

knowledge of each technology. Over the past twenty years, there have been significant

advances in the DevOps community to code and how we deploy our infrastructure.

This is a critical step in the evolution of modern infrastructure. DevOps has kept us

ahead of the scale required, but just barely. Arguably, the same amount of knowledge

12 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

is needed to fully script a single database server deployment. It’s just that now we can

do it a million times over if needed with templates and scripts. What has been lacking

is a connectedness between the components and a holistic view of the entire applica‐

tion stack. Foreshadowing: this is a problem that needed to be solved.

Like any good engineering problem, let’s break it down into manageable parts. The

first is resource management. Regardless of the many ways we have developed to

work at scale, fundamentally, we are trying to manage three things as efficiently as

possible: compute, network and storage, as shown in Figure 1-3. These are the critical

resources that every application needs and the fuel that’s burned during growth. Not

surprisingly, these are also the resources that carry the monetary component to a run‐

ning application. We get rewarded when we use the resources wisely and pay a literal

high price if we don’t. Anywhere you run your application, these are the most primi‐

tive units. When on-prem, everything is bought and owned. When using the cloud,

we’re renting.

Figure 1-3. Fundamental resources of cloud applications: compute, network, and storage

The second part of this problem is the issue of an entire stack acting as a single entity.

DevOps has already given us many tools to manage individual components, but the

connective tissue between them provides the potential for incredible efficiency. Simi‐

lar to how applications are packaged for the desktop but working at data center scales.

That potential has launched an entire community around cloud native applications.

These applications are very similar to what we have always deployed. The difference

is that modern cloud applications aren’t a single process with business logic. They are

a complex coordination of many containerized processes that need to communicate

securely and reliably. Storage has to match the current needs of the application but

remains aware of how it contributes to the stability of the application. When we think

of deploying stateless applications without data managed in the same control plane, it

sounds incomplete because it is. Breaking up your application components into dif‐

ferent control planes creates more complexity and is counter to the ideals of cloud

native.

More Infrastructure, More Problems | 13

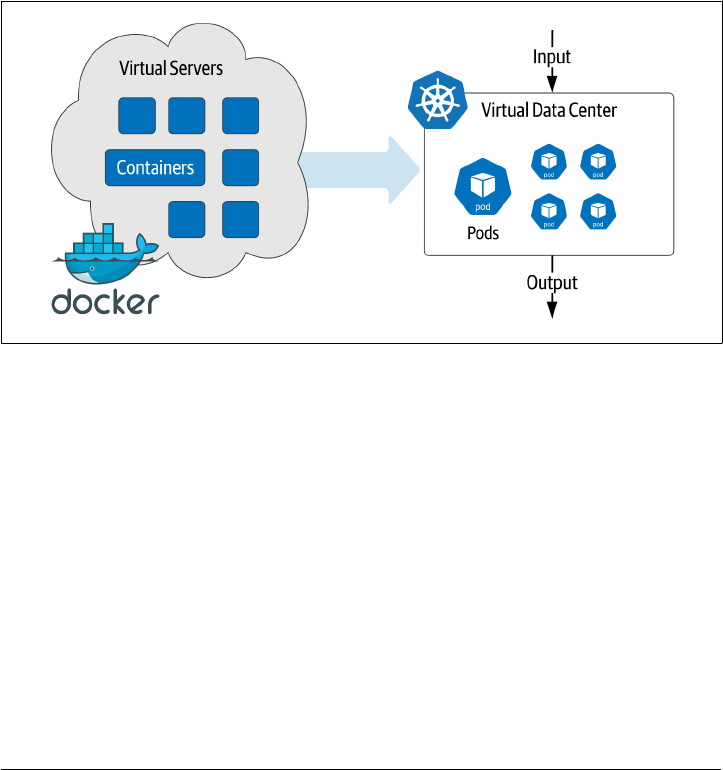

Kubernetes Leading the Way

As mentioned before, DevOps automation has kept us on the leading edge of meeting

scale needs. Containers created the need for much better orchestration, and the

answer has been Kubernetes. For operators, describing a complete application stack

in a deployment file makes a reproducible and portable infrastructure. This is because

Kubernetes has gone far beyond simply the deployment management that has been

popular in the DevOps tool bag. The Kubernetes control plane applies the deploy‐

ment requirement across the underlying compute, network, and storage to manage

the entire application infrastructure lifecycle. The desired state of your application is

maintained even when the underlying hardware changes. Instead of deploying virtual

machines, we are now deploying virtual datacenters as a complete definition as

shown in Figure 1-4.

Figure 1-4. Moving from virtual servers to virtual data centers

The rise in popularity of Kubernetes has eclipsed all other container orchestration

tools used in DevOps. It has overtaken every other way we deploy infrastructure, and

it will be even more so in the future. There’s no sign of it slowing down. However, the

bulk of early adoption was primarily in stateless services.

Managing data infrastructure at a large scale was a problem well before the move to

containers and Kubernetes. Stateful services like databases took a different track par‐

allel to the Kubernetes adoption curve. Many recommended that Kubernetes was the

wrong way to run stateful services based on an architecture that favored ephemeral

workloads. That worked until it didn’t and is now driving the needed changes in

Kubernetes to converge the application stack.

So what are the challenges of stateful services? Why has it been hard to deploy data

infrastructure with Kubernetes? Let’s consider each component of our infrastructure.

14 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

Managing Compute on Kubernetes

In data infrastructure, counting on Moore’s law has made upgrading a regular event.

If you aren’t familiar, Moore’s law predicted that computing capacity doubles every 18

months. If your requirements double every 18 months, you can keep up by replacing

hardware. Eventually, raw compute power started leveling out. Vendors started

adding more processors and cores to keep up with Moore’s law, leading to single

server resource sharing with virtual machines and containers. Enabling us to tap into

the vast pools of computing power left stranded in islands of physical servers. Kuber‐

netes expanded the scope of compute resource management by considering the total

datacenter as one large resource pool across multiple physical devices.

Sharing compute resources with other services has been somewhat taboo in the data

world. Data workloads are typically resource intensive, and the potential of one ser‐

vice impacting another (known as the noisy neighbor problem) has led to policies of

keeping them isolated from other workloads. This one-size fits all approach elimi‐

nates the possibility for more significant benefits. First is the assumption that all data

service resource requirements are the same. Apache Pulsar™ brokers can have far

fewer requirements than an Apache Spark™ worker, and neither are similar to a sizea‐

ble MySQL instance used for OLAP reporting. Second, the ability to decouple your

underlying hardware from running applications gives operators a lot of undervalued

flexibility. Cloud native applications that need scale, elasticity, and self-healing need

what Kubernetes can deliver. Data is no exception.

Managing Network on Kubernetes

Building a distributed application, by nature, requires a reliable and secure network.

Cloud native applications increase the complexity of adding and subtracting services

making dynamic network configuration a new requirement. Kubernetes manages all

of this inside of your virtual data center automatically. When new services come

online, it’s like a virtual network team springs to action. IP addresses are assigned,

routes are created, DNS entries are added, then the virtual security team ensures fire‐

wall rules are in place, and when asked, TLS certificates provide end-to-end encryp‐

tion.

Data infrastructure tends to be far less dynamic than something like microservices. A

fixed IP with a hostname has been the norm for databases. Analytic systems like

Apache Flink™ are dynamic in processing but have fixed hardware addressing assign‐

ments. Quality of service is typically at the top of the requirements list and, as a

result, the desire for dedicated hardware and dedicated networks has turned adminis‐

trators off of Kubernetes.

The advantage of data infrastructure running in Kubernetes is less about the past

requirements and more about what’s needed for the future. Scaling resources dynami‐

cally can create a waterfall of dependencies. Automation is the only way to maintain

Kubernetes Leading the Way | 15

clean and efficient networks, which are the lifeblood of distributed stateless systems.

The future of cloud native applications will only include more components and new

challenges such as where applications run. We can add regulatory compliance and

data sovereignty to previous concerns about latency and throughput. The declarative

nature of Kubernetes networks make it a perfect fit for data infrastructure.

Managing Storage on Kubernetes

Any service that provides persistence or analytics over large volumes of data will need

the right kind of storage device. Early versions of Kubernetes considered storage a

basic commodity part of the stack and assumed that most workloads were ephemeral.

For data, this was a huge mismatch. If your Postgres data files get deleted every time a

container is moved, that just doesn’t work. Additionally, implementing the underlying

block storage can be a broad spectrum. From high performance NVMe disks to old

5400 RPM spinning disks. You may not know what you’ll get. Thankfully this was an

essential focus of Kubernetes over the past few years and has been significantly

improved.

With the addition of features like Storage Classes, it is possible to address specific

requirements for performance or capacity or both. With automation, we can avoid

the point when you don’t have enough of either. Avoiding surprises is the domain of

capacity management—both initializing the needed capacity and growing when

required. When you run out of capacity in your storage, everything grinds to a halt.

Coupling the distributed nature of Kubernetes with data storage opens up more pos‐

sibilities for self healing. Automated backups and snapshots keep you ready for

potential data loss scenarios. Placing compute and storage together to minimize

hardware failure risks and automatic recovery to the desired state when the inevitable

failure occurs. All of which makes the data storage aspects of Kubernetes much more

attractive.

Cloud native data components

Now that we have defined the resources consumed in cloud native applications let’s

clarify the types of data infrastructure that powers them. Instead of a comprehensive

list of every possible product, we’ll break them down into larger buckets with similar

characteristics.

Persistence

This is probably assumed when we talk about data infrastructure. Systems that

store data and provide access by some method of a query. Relational databases

like MySQL and Postgres. NoSQL systems like Cassandra and MongoDB. In the

world of Kubernetes these have been the strongest, last holdouts due to the strict‐

est resource requirements. This has been for good reasons too. Databases are

16 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

usually critical to a running application and central to every other part of the sys‐

tem.

Streaming

The most basic function of streaming is facilitating the high-speed movement of

data from one point to another. Streaming systems provide a variety of delivery

semantics based on a use case. In some cases, data can be delivered to many cli‐

ents or when strict controls are needed, delivered only once. A further enhance‐

ment of streaming is the addition of processing. Altering or enhancing data while

in mid-transport. The need for faster insights into data has propelled streaming

analytics into mission critical status catching up with persistence systems for

importance. Examples of steaming systems that move data are Apache Flink™ and

Apache Kafka™, where processing system examples are Apache Flink™ and

Apache Storm™.

Batch Analytics

One of the first big data problems. Analyzing large sets of data to gain insights or

re-purpose into new data. Apache Hadoop™ was the first large scale system for

batch analytics that set the expectations around using large volumes of compute

and storage, coordinated in a way to produce a final result. Typically, these are

issued as jobs distributed throughout the cluster which is something that is found

in Apache Spark™. The concern with costs can be much more prevalent in these

systems due to the sheer volume of resources needed. Orchestration systems help

mitigate the costs by intelligent allocation.

Looking forward

There is a very compelling future with cloud native data, both with what we have

available today and what we can have in the future. The path we take between those

two points is up to us: the community of people responsible for data infrastructure.

Just as we have always done, we see a new challenge and take it on. There is plenty for

everyone to do here, but the result could be pretty amazing and raise the bar, yet

again.

A call for databases to modernize on Kubernetes

With Rick Vasquez, Senior Director, Strategic Initiatives, Western Digital

Kubernetes is the catalyst for this current macro trend of change. Data infrastructure

has to run the same as the rest of the application infrastructure. In a conference talk,

Rick Vasquez, a leader in data infrastructure for years, wrote an open letter to the

database community on the need for change. Here is a summary of that talk:

Looking forward | 17

This is something for anyone working with databases in the 2020s. Kubernetes is

leading the charge in building cloud native and distributed systems. Data systems

aren’t leveraging the full capacity and feature set possible if they were better integrated

with Kubernetes. I’m a convert from the “you should never run a database in a con‐

tainer” way of thinking. Now I think we should be pushing everybody to have the

main deployment in Kubernetes. My background has always been on scale enterprise

use cases. I don’t see this as a passing fad, I’m looking at the applicability to global

scale for some of the largest companies in the world.

One line of thinking we need to overcome is treating Kubernetes like an operating

system that enables other applications to run on it. That’s the wrong way to look at

running data workloads. If your system runs in a container, then of course it will

work on Kubernetes, right? No! It will react to how the control plane deploys and

runs your application, and it may or may not be what you want. What if data systems

were more tightly integrated with Kubernetes and could offload functions to be han‐

dled by the Kubernetes control plane? Service discovery, load balancing, storage

orchestration, automated rollouts, and rollbacks, automated bin packing, self-healing,

secret and config management are all powerful things that allow for you to have a

consistent developer and SRE experience. The name of the game with Kubernetes is

driving consistency. You can use Kubernetes to become globally consistent across all

your deployments and do them the same way over and over. But that needs to include

database systems. Imagine if you have Postgres, MongoDB, MySQL, or Cassandra and

it was built natively on Kubernetes. What would you do?

Having the access to use different storage tiers, either local or remote disk. All of it

declarative in some configuration objects. I want to configure that in and with the

database. If I’m using MySQL, I want logs to be on the local disk, because I don’t want

any bottlenecks. I want certain tables to be on a slower disk that may be over the net‐

work. And, I want the last seven days of data to be in hot, local NVMe disk. Using

every single bit of capacity that you have with replicas actually doing things like off‐

loading reads or multiple write nodes, and one big aggregate for analytics. All of those

things should be possible with a Kubernetes based deployment with a cloud native

database.

Databases don’t reason about or have an opinion about how big they are. If you make

it bigger, it just needs more resources. You can set up auto-scaling to get you bigger or

horizontal scaling. What happens whenever you want to use the true elasticity that’s

given to you by Kubernetes? It’s not just the scale up and out. It’s the scale back and

down! Why don’t databases just do that? That is so important to maximize the value

that you’re getting out of a Kubernetes based deployment or more broadly, a cloud

native based deployment. We have a lot of work to do but the future is worth it.

This talk was specifically about databases, but we can extrapolate his call to action for

our data infrastructure running on Kubernetes. Unlike deploying a data application

18 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

on physical servers, introducing the Kubernetes control plane requires a conversation

with the services it runs.

Getting ready for the revolution

As engineers that create and run data infrastructure, we have to be ready for the

changes coming. Both in how we operate and the mindset we have about the role of

data infrastructure. The following sections are meant to describe what you can do to

be ready for the future of cloud native data running in Kubernetes.

Adopt an SRE mindset

The role of Site Reliability Engineer (SRE) has grown with the adoption of cloud

native methodologies. If we intend our infrastructure to converge, we as data infra‐

structure engineers must learn new skills and adopt new practices.

Site reliability engineering is a set of principles and practices that incorporates aspects

of software engineering and applies them to infrastructure and operations problems.

The main goals are to create scalable and highly reliable software systems. Site relia‐

bility engineering is closely related to DevOps, a set of practices that combine soft‐

ware development and IT operations, and SRE has also been described as a specific

implementation of DevOps.

Deploying data infrastructure has been primarily concerned with the specific compo‐

nents deployed - the “what.” For example, you may find yourself focused on deploy‐

ing MySQL at scale or using Apache Spark to analyze large volumes of data. Adopting

an SRE mindset means going beyond what you are deploying and putting a greater

focus on the how. How will all of the pieces work together to meet the goals of the

application? A holistic view of a deployment considers how each piece will interact,

the required access including security, and the observability of every aspect to ensure

meeting service levels.

If your current primary or secondary role is Database Administrator, there is no bet‐

ter time to make the transition. The trend on LinkedIn shows a year-over-year

decrease in the DBA role and a massive increase for SREs. Engineers that have

learned the skills required to run critical database infrastructure have an essential

baseline that translates into what’s needed to manage cloud native data. These

include:

• Availability

• Latency

• Change Management

•

Emergency response

Getting ready for the revolution | 19

• Capacity Management

New skills need to be added to this list to become better adapted to the more signifi‐

cant responsibility of the entire application. These are skills you may already have,

but they include:

CI/CD pipelines

Embrace the big picture of taking code from repository to production. There’s

nothing that accelerates application development more in an organization. Con‐

tinuous Integration (CI) builds new code into the application stack and auto‐

mates all testing to ensure quality. Continuous Deployment (CD) takes the fully

tested and certified builds and automatically deploys them into production. Used

in combination (Pipeline), organizations can drastically increase developer veloc‐

ity and productivity.

Observability

Monitoring is something anyone with experience in infrastructure is familiar

with. In the “what” part of DevOps you know services are healthy and have the

information needed to diagnose problems. Observability expands monitoring

into the “how” of your application by considering everything as a whole. For

example, tracing the source of latency in a highly distributed application by giv‐

ing insight into every hop data takes.

Knowing the code

When things go bad in a large distributed application it’s not always a process

failure. In many cases, it could be a bug in the code or subtle implementation

detail. Being responsible for the entire health of the application, you will need to

understand the code that is executing in the provided environment. Properly

implemented observability will help you find problems and that includes the

software instrumentation. SREs and development teams need to have clear and

regular communication and code is common ground.

Embrace Distributed Computing

Deploying your applications in Kubernetes means embracing all of what distributed

computing offers. When you are accustomed to single system thinking, it can be a

hard transition. Mainly in the shift in thinking around expectations and understand‐

ing where problems crop up. For example, with every process contained in a single

system, latency will be close to zero. It’s not what you have to manage. CPU and

memory resources are the primary concern there. In the 1990s, Sun Microsystems

was leading in the growing field of distributed computing and published this list of

common fallacies:

1.

The network is reliable

20 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

2. Latency is zero

3. Bandwidth is infinite

4. The network is secure

5.

Topology doesn’t change

6. There is one administrator

7.

Transport cost is zero

8. The network is homogeneous

These items most likely have an interesting story behind them where somebody

assumed one of these fallacies and found themselves very disappointed. The result

wasn’t what they expected and endless hours were lost trying to figure out the wrong

problem.

Embracing distributed methodologies is worth the effort in the long run. It is how we

build large scale applications and will be for a very long time. The challenge is worth

the reward, and for those of us who do this daily, it can be a lot of fun too! Kubernetes

applications will test each of these fallacies given its default distributed nature. When

you plan your deployment, considering things such as the cost of transport from one

place to another or latency implications. They will save you a lot of wasted time and

re-design.

Principles of Cloud Native Data Infrastructure

As engineering professionals, we seek standards and best-practices to build upon. To

make data the most “cloud native” it can be, we need to embrace everything Kuber‐

netes offers. A truly cloud native approach means adopting key elements of the

Kubernetes design paradigm and building from there. An entire cloud native applica‐

tion that includes data must be one that can run effectively on Kubernetes. Let’s

explore a few Kubernetes design principles that point the way.

Principle 1: Leverage compute, network, and storage as commodity APIs

One of the keys to the success of cloud computing is the commoditization of compu‐

tation, networking, and storage as resources we can provision via simple APIs. Con‐

sider this sampling of AWS services.

Compute

We allocate virtual machines through EC2 and Autoscaling Groups (ASGs).

Network

We manage traffic using Elastic Load Balancers (ELB), Route 53, and VPC peer‐

ing.

Getting ready for the revolution | 21

Storage

We persist data using options such as the Simple Storage Service (S3) for long-

term object storage, or Elastic Block Storage (EBS) volumes for our compute

instances.

Kubernetes offers its own APIs to provide similar services for a world of container‐

ized applications:

Compute

Pods, Deployments, and ReplicaSets manage the scheduling and life cycle of con‐

tainers on computing hardware.

Network

Services and Ingress expose a container’s networked interfaces.

Storage:

PersistentVolumes and Statefulets enable flexible association of containers to

storage.

Kubernetes resources promote the portability of applications across Kubernetes dis‐

tributions and service providers. What does this mean for databases? They are simply

applications that leverage computation, networking, and storage resources to provide

the services of data persistence and retrieval:

Compute

A database needs sufficient processing power to process incoming data and quer‐

ies. Each database node is deployed as a pod and grouped in StatefulSets, ena‐

bling Kubernetes to manage scaling out and scaling in.

Network

A database needs to expose interfaces for data and control. We can use Kuber‐

netes Services and Ingress Controllers to expose these interfaces.

Storage

A database uses persistent volumes of a specified storage class to store and

retrieve data.

Thinking of databases in terms of their compute, network, and storage needs removes

much of the complexity involved in deployment on Kubernetes.

Principle 2: Separate the control and data planes

Kubernetes promotes the separation of control and data planes. The Kubernetes API

server is the key data plane interface used to request computing resources, while the

control plane manages the details of mapping those requests onto an underlying IaaS

platform.

22 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

We can apply this same pattern to databases. For example, a database data plane con‐

sists of ports exposed for clients, and for distributed databases, ports used for com‐

munication between database nodes. The control plane includes interfaces provided

by the database for administration and metrics collection and tooling that performs

operational maintenance tasks. Much of this capability can and should be imple‐

mented via the Kubernetes operator pattern. Operators define custom resources

(CRDs) and provide control loops that observe the state of those resources and take

actions to move them toward the desired state, helping extend Kubernetes with

domain-specific logic.

Principle 3: Make observability easy

The three pillars of observable systems are logging, metrics, and tracing. Kubernetes

provides a great starting point by exposing the logs of each container to third-party

log aggregation solutions. There are multiple solutions available for metrics, tracing,

and visualization, and we’ll explore several of them in this book.

Principle 4: Make the default

conguration secure

Kubernetes networking is secure by default: ports must be explicitly exposed in order

to be accessed externally to a pod. This sets a valuable precedent for database deploy‐

ment, forcing us to think carefully about how each control plane and data plane inter‐

face will be exposed and which interfaces should be exposed via a Kubernetes Service.

Kubernetes also provides facilities for secret management which can be used for shar‐

ing encryption keys and configuring administrative accounts.

Principle 5: Prefer declarative

conguration

In the Kubernetes declarative approach, you specify the desired state of resources,

and controllers manipulate the underlying infrastructure in order to achieve that

state. Operators for data infrastructure can manage the details of how to scale up

intelligently, for example, deciding how to reallocate shards or partitions when scal‐

ing out additional nodes or selecting which nodes to remove to scale down elastically.

The next generation of operators should enable us to specify rules for stored data size,

number of transactions per second, or both. Perhaps we’ll be able to specify maxi‐

mum and minimum cluster sizes, and when to move less frequently used data to

object storage. This will allow for more automation and efficiency in our data infra‐

structure.

Summary

At this point, we hope you are ready for the exciting journey in the pages ahead. The

move to cloud native applications must include data, and to do this, we will leverage

Kubernetes to include stateless and stateful services. This chapter covered cloud

Summary | 23

native data infrastructure that can scale elastically and resist any downtime due to

system failures and how to build these systems. We as engineers must embrace the

principles of cloud native infrastructure and in some cases, learn new skills. Congrat‐

ulations, you have begun a fantastic journey into the future of building cloud native

applications. Turn the page, and let’s go!

24 | Chapter 1: Introduction to Cloud Native Data Infrastructure: Persistence, Streaming, and Batch Analytics

CHAPTER 2

Managing Data Storage on Kubernetes

A Note for Early Release Readers

With Early Release ebooks, you get books in their earliest form—the author’s raw and

unedited content as they write—so you can take advantage of these technologies long

before the official release of these titles.

This will be the 2nd chapter of the final book. Please note that the GitHub repo will be

made active later on.

If you have comments about how we might improve the content and/or examples in

this book, or if you notice missing material within this chapter, please reach out to the

authors at [email protected] (Jeff Carpenter) and [email protected] (Pat‐

rick McFadin).

“There is no such thing as a stateless architecture. All applications store state some‐

where” - Alex Chircop, CEO, StorageOS

In the previous chapter, we painted a picture of a possible near future with powerful,

stateful, data-intensive applications running on Kubernetes. To get there, we’re going

to need data infrastructure for persistence, streaming, and analytics, and to build out

this infrastructure, we’ll need to leverage the primitives that Kubernetes provides to

help manage the three commodities of cloud computing: compute, network, and

storage. In the next several chapters we begin to look at these primitives, starting with

storage, in order to see how they can be combined to create the data infrastructure we

need.

To echo the point raised by Alex Chircop in the quote above, all applications must

store their state somewhere, which is why we’ll focus in this chapter on the basic

abstractions Kubernetes provides for interacting with storage. We’ll also look at the

25

emerging innovations being offered by storage vendors and open source projects that

are creating storage infrastructure for Kubernetes that itself embodies cloud-native

principles.

Let’s start our exploration with a look at managing persistence in containerized appli‐

cations in general and use that as a jumping off point for our investigation into data

storage on Kubernetes.

Docker, Containers, and State

The problem of managing state in distributed, cloud-native applications is not unique

to Kubernetes. A quick search will show that stateful workloads have been an area of

concern on other container orchestration platforms such as Mesos and Docker

Swarm. Part of this has to do with the nature of container orchestration, and part is

driven by the nature of containers themselves.

First, let’s consider containers. One of the key value propositions of containers is their

ephemeral nature. Containers are designed to be disposable and replaceable, so they

need to start quickly and use as few resources for overhead processing as possible. For

this reason, most container images are built from base images containing stream‐

lined, Linux-based, open-source operating systems such as Ubuntu, that boot quickly

and incorporate only essential libraries for the contained application or microservice.

As the name implies, containers are designed to be self-contained, incorporating all

their dependencies in immutable images, while their configuration and data is exter‐

nalized. These properties make containers portable so that we can run them any‐

where a compatible container runtime is available.

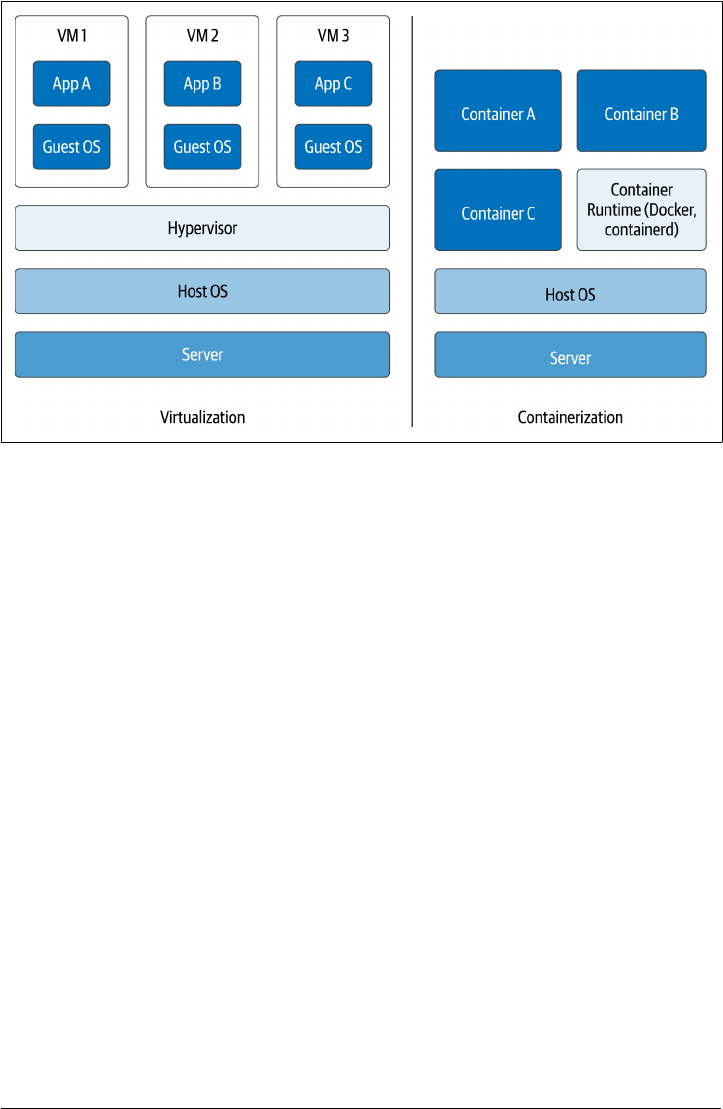

As shown in Figure 2-1, containers require less overhead than traditional virtual

machines, which run a guest operating system per virtual machine, with a hypervisor

layer to implement system calls onto the underlying host operating system.

26 | Chapter 2: Managing Data Storage on Kubernetes

Figure 2-1. Comparing containerization to virtualization

Although containers have made applications more portable, it’s proven a bigger chal‐

lenge to make their data portable. We’ll examine the idea of portable data sets in

Chapter 12. Since a container itself is ephemeral, any data that is to survive beyond

the life of the container must by definition reside externally. The key feature for a

container technology is to provide mechanisms to link to persistent storage, and the

key feature for a container orchestration technology is the ability to schedule contain‐

ers in such a way that they can access persistent storage efficiently.

Managing State in Docker

Let’s take a look at the most popular container technology, Docker, to see how con‐

tainers can store data. The key storage concept in Docker is the volume. From the

perspective of a Docker container, a volume is a directory that can support read-only

or read-write access. Docker supports the mounting of multiple different data stores

as volumes. We’ll introduce several options so we can later note their equivalents in

Kubernetes.

Bind mounts

The simplest approach for creating a volume is to bind a directory in the container to

a directory on the host system. This is called a bind mount, as shown in Figure 2-2.

Docker, Containers, and State | 27

Figure 2-2. Using Docker Bind Mounts to access the host lesystem

When starting a container within Docker, you specify a bind mount with the --

volume or -v option and the local filesystem path and container path to use. For

example, you could start an instance of the Nginx web server, and map a local project

folder from your development machine into the container. This is a command you

can test out in your own environment if you have Docker installed:

docker run -it --rm -d --name web -v ~/site-content:/usr/share/nginx/html nginx

If the local path directory does not already exist, the Docker runtime will create it.

Docker allows you to create bind mounts with read-only or read-write permissions.

Because the volume is represented as a directory, the application running in the con‐

tainer can put anything that can be represented as a file into the volume - even a data‐

base.

Bind mounts are quite useful for development work. However, using bind mounts is

not suitable for a production environment since this leads to a container being

dependent on a file being present in a specific host. This might be fine for a single

machine deployment, but production deployments tend to be spread across multiple

hosts. Another concern is the potential security hole that is presented by opening up

access from the container to the host filesystem. For these reasons, we need another

approach for production deployments.

Volumes

The preferred option within Docker is to use volumes. Docker volumes are created

and managed by Docker under a specific directory on the host filesystem. The

Docker volume create command is used to create a volume. For example, you might

create a volume called site-content to store files for a website:

28 | Chapter 2: Managing Data Storage on Kubernetes

docker volume create site-content

If no name is specified, Docker assigns a random name. After creation, the resulting

volume is available to mount in a container using the form -v VOLUME-

NAME:CONTAINER-PATH. For example, you might use a volume like the one just created

to allow an Nginx container to read the content, while allowing another container to

edit the content, using the to option:

docker run -it --rm -d --name web -v site-content:/usr/share/nginx/html:ro nginx

Note: Docker Volume mount syntax

Docker also supports a --mount syntax which allows you to specify

the source and target folders more explicitly. This notation is con‐

sidered more modern, but it is also more verbose. The syntax

shown above is still valid and is the more commonly used syntax.

As implied above, a Docker volume can be mounted in more than one container at

once, as shown in Figure 2-3.

Figure 2-3. Creating Docker Volumes to share data between containers on the host

The advantage of using Docker volumes is that Docker manages the filesystem access

for containers, which makes it much simpler to enforce capacity and security restric‐

tions on containers.

Tmpfs Mounts

Docker supports two types of mounts that are specific to the operating system used

by the host system: tmpfs (or “temporary filesystem”) and named pipes. Named pipes

are available on Docker for Windows, but since they are typically not used in K8s, we

won’t give much consideration to them here.

Docker, Containers, and State | 29

Tmpfs mounts are available when running Docker on Linux. A tmpfs mount exists

only in memory for the lifespan of the container, so the contents are never present on

disk, as shown in Figure 2-4. Tmpfs mounts are useful for applications that are writ‐

ten to persist a relatively small amount of data, especially sensitive data that you don’t

want written to the host filesystem. Because the data is stored in memory, there is a

side benefit of faster access.

Figure 2-4. Creating a temporary volume using Docker tmpfs

To create a tmpfs mount, you use the docker run --tmpfs option. For example, you

could use a command like this to specify a tmpfs volume to store Nginx logs for a

webserver processing sensitive data:

docker run -it --rm -d --name web -tmpfs /var/log/nginx nginx

The --mount option may also be used for more control over configurable options.

Volume Drivers

The Docker Engine has an extensible architecture which allows you to add custom‐

ized behavior via plugins for capabilities including networking, storage, and authori‐

zation. Third-party storage plugins are available for multiple open-source and

commercial providers, including the public clouds and various networked file sys‐

tems. Taking advantage of these involves installing the plugin with Docker engine

and then specifying the associated volume driver when starting Docker containers

using that storage, as shown in Figure 2-5.

30 | Chapter 2: Managing Data Storage on Kubernetes

Figure 2-5. Using Docker Volume Drivers to access networked storage

For more information on working with the various types of volumes supported in

Docker, see the Docker Storage documentation, as well as the documentation for the

docker run command.

Sidebar: File, Block, and Object Storage

In our modern era of cloud architectures, the three main formats in which storage is

traditionally provided to applications are files, blocks, and objects. Each of these store

and provide access to data in different ways.

• File storage represents data as a hierarchy of folders, each of which can contain

files. The file is the basic unit of access for both storage and retrieval. The root

directory that is to be accessed by a container is mounted into the container file‐

system such that it looks like any other directory. Each of the public clouds pro‐

vides their own file storage, for example Google Cloud Filestore, or Amazon

Elastic Filestore. Gluster is an open-source distributed file system. Many of these

systems are compatible with the Network File System (NFS), a distributed file

system protocol invented at Sun Microsystems dating back to 1984 that is still in

common use.

• Block storage organizes data in chunks and allocates those chunks across a set of

managed volumes. When you provide data to a block storage system, it divides it

up into chunks of varying sizes and distributes those chunks in order to use the

Docker, Containers, and State | 31

underlying volumes the most efficiently. When you query a block storage system,

it retrieves the chunks from their various locations and provides the data back to

you. This flexibility makes block storage a great solution when you have a hetero‐

geneous set of storage devices available. Block storage doesn’t provide a lot of

metadata handling, which can place more burden on the application.

•

Object storage organizes data in units known as objects. Each object is referenced

by a unique identifier or “key”, and can support rich metadata tagging that ena‐

bles searching. Objects are organized in buckets. This flat, non-hierarchical orga‐

nization makes object storage easy to scale. Amazon’s Simple Storage Service (S3)

is the canonical example of object storage and most object storage products will

claim compatibility with the S3 API.

If you’re tasked with building or selecting data infrastructure, you’ll want to under‐

stand the strengths and weaknesses of each of these patterns.

Kubernetes Resources for Data Storage

Now that you understand basic concepts of container and cloud storage, let’s see what

Kubernetes brings to the table. In this section, we’ll introduce some of the key Kuber‐

netes concepts or “resources” in the API for attaching storage to containerized appli‐

cations. Even if you are already somewhat familiar with these resources, you’ll want

to stay tuned, as we’ll take a special focus on how each one relates to stateful data.

Pods and Volumes

One of the first Kubernetes resources new users encounter is the pod. The pod is the

basic unit of deployment of a Kubernetes workload. A pod provides an environment

for running containers, and the Kubernetes control plane is responsible for deploying

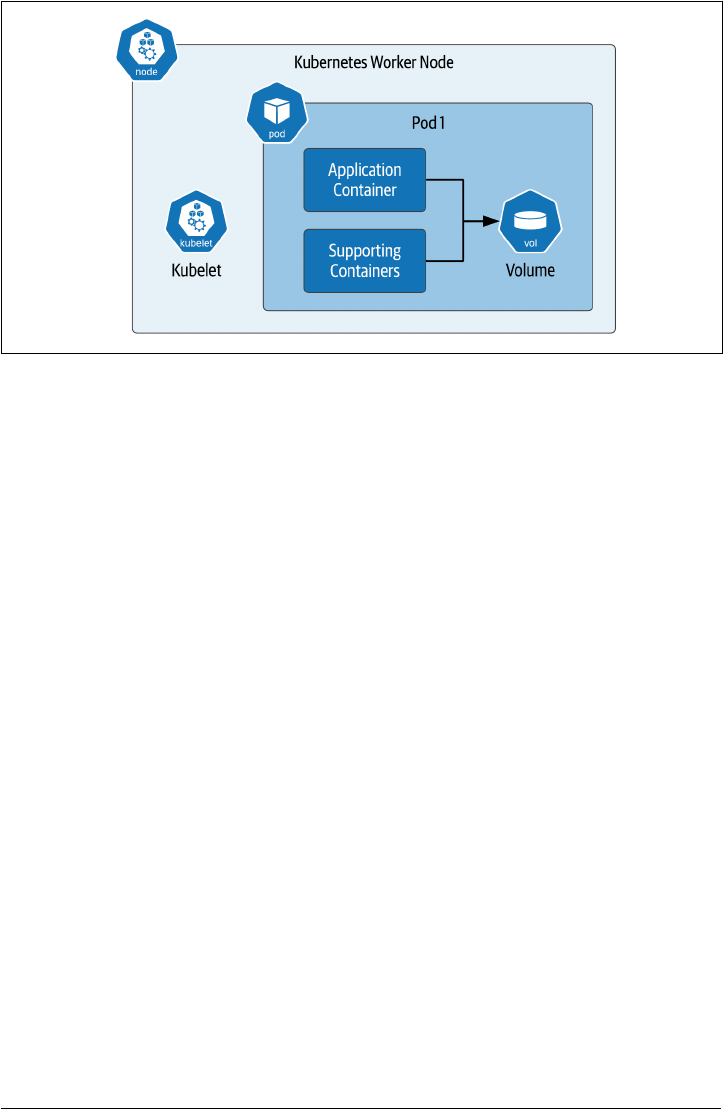

pods to Kubernetes worker nodes. The Kubelet is a component of the Kubernetes

control plane that runs on each worker node. It is responsible for running pods on a

node, as well as monitoring the health of these pods and the containers inside them.

These elements are summarized in Figure 2-6.

While a pod can contain multiple containers, the best practice is for a pod to contain

a single application container, along with optional additional helper containers, as

shown in the figure. These helper containers might include init containers that run

prior to the main application container in order to perform configuration tasks, or

sidecar containers that run alongside the main application container to provide

helper services such as observability or management. In future chapters we’ll demon‐

strate how data infrastructure deployments can take advantage of these architectural

patterns.

32 | Chapter 2: Managing Data Storage on Kubernetes

Figure 2-6. Using Volumes in Kubernetes Pods

Now let’s consider how persistence is supported within this pod architecture. As with

Docker, the “on disk” data in a container is lost when a container crashes. The kubelet

is responsible for restarting the container, but this new container is really a replace‐

ment for the original container - it will have a distinct identity, and start with a com‐

pletely new state.

In Kubernetes, the term volume is used to represent access to storage within a pod. By

using a volume, the container has the ability to persist data that will outlive the con‐

tainer (and potentially the pod as well, as we’ll see shortly). A volume may be accessed

by multiple containers in a pod. Each container has its own volumeMount within the

pod that specifies the directory to which it should be mounted, allowing the mount

point to differ between containers.

There are multiple cases where you might want to share data between multiple con‐

tainers in a pod:

• An init container creates a custom configuration file for the particular environ‐

ment that the application container mounts in order to obtain configuration val‐

ues.

• The application pod writes logs, and a sidecar pod reads those logs to identify

alert conditions that are reported to an external monitoring tool.

However, you’ll likely want to avoid situations in which multiple containers are writ‐

ing to the same volume, because you’ll have to ensure the multiple writers don’t con‐

flict - Kubernetes does not do that for you.

Kubernetes Resources for Data Storage | 33

Note: Preparing to run sample code

The examples in this chapter (and the rest of the book) assume you

have access to a running Kubernetes cluster. For the examples in

this chapter, a development cluster on your local machine such as

Kind, K3s, or Docker Desktop should be sufficient. The source

code used in this section is located at Kubernetes Storage Examples.

apiVersion: v1

kind: Pod

metadata:

name: my-pod

spec:

containers:

- name: my-app

image: nginx

volumeMounts:

- name: web-data

mountPath: /app/config

volumes:

- name: web-data

Notice the two parts of the configuration: the volume is defined under spec.volumes,

and the usage of the volumes is defined under spec.containers.volumeMounts. First,

the name of the volume is referenced under the volumeMounts, and the directory

where it is to be mounted is specified by the mountPath. When declaring a pod speci‐

fication, volumes and volume mounts go together. For your configuration to be valid,

a volume must be declared before being referenced, and a volume must be used by at

least one container in the pod.

You may have also noticed that the volume only has a name. You haven’t specified any

additional information. What do you think this will do? You could try this out for

yourself by using the example source code file nginx-pod.yaml or cutting and pasting

the configuration above to a file with that name, and executing the kubectl command

against a configured Kubernetes cluster:

kubectl apply -f nginx-pod.yaml

You can get more information about the pod that was created using the kubectl get

pod command, for example:

kubectl get pod my-pod -o yaml | grep -A 5 " volumes:”

And the results might look something like this:

volumes:

- emptyDir: {}

name: web-data

- name: default-token-2fp89

34 | Chapter 2: Managing Data Storage on Kubernetes

secret:

defaultMode: 420

As you can see, Kubernetes supplied some additional information when creating the

requested volume, defaulting it to a type of emptyDir. Other default attributes may

differ depending on what Kubernetes engine you are using and we won’t discuss them

further here.

There are several different types of volumes that can be mounted in a container, let’s

have a look.

Ephemeral volumes

You’ll remember tmpfs volumes from our discussion of Docker volumes above,

which provide temporary storage for the lifespan of a single container. Kubernetes

provides the concept of an ephemeral volumes, which is similar, but at the scope of a

pod. The emptyDir introduced in the example above is a type of ephemeral volume.

Ephemeral volumes can be useful for data infrastructure or other applications that

want to create a cache for fast access. Although they do not persist beyond the life‐

span of a pod, they can still exhibit some of the typical properties of other volumes

for longer-term persistence, such as the ability to snapshot. Ephemeral volumes are

slightly easier to set up than PersistentVolumes because they are declared entirely

inline in the pod definition without reference to other Kubernetes resources. As you

will see below, creating and using PersistentVolumes is a bit more involved.

Note: Other ephemeral storage providers

Some of the in-tree and CSI storage drivers we’ll discuss below that

provide PersistentVolumes also provide an ephemeral volume

option. You’ll want to check the documentation of the specific pro‐

vider in order to see what options are available.

Conguration volumes

Kubernetes provides several constructs for injecting configuration data into a pod as a

volume. These volume types are also considered ephemeral in the sense that they do

not provide a mechanism for allowing applications to persist their own data.

These volume types are relevant to our exploration in this book since they provide a

useful means of configuring applications and data infrastructure running on Kuber‐

netes. We’ll describe each of them briefly:

CongMap Volumes

A ConfigMap is a Kubernetes resource that is used to store configuration values

external to an application as a set of name-value pairs. For example, an applica‐

tion might require connection details for an underlying database such as an IP

Kubernetes Resources for Data Storage | 35

address and port number. Defining these in a ConfigMap is a good way to exter‐

nalize this information from the application. The resulting configuration data

can be mounted into the application as a volume, where it will appear as a direc‐

tory. Each configuration value is represented as a file, where the filename is the

key, and the contents of the file contain the value. See the Kubernetes documen‐

tation for more information on mounting ConfigMaps as volumes.

Secret Volumes

A Secret is similar to a ConfigMap, only it is intended for securing access to sen‐

sitive data that requires protection. For example, you might want to create a

secret containing database access credentials such as a username and password.

Configuring and accessing Secrets is similar to using ConfigMap, with the addi‐

tional benefit that Kubernetes helps decrypt the secret upon access within the

pod. See the Kubernetes documentation for more information on mounting

Secrets as volumes.

Downward API Volumes

The Kubernetes Downward API exposes metadata about pods and containers,

either as environment variables or as volumes. This is the same metadata that is

used by kubectl and other clients.

The available pod metadata includes the pod’s name, ID, namespace, labels, and

annotations. The containerized application might wish to use the pod informa‐

tion for logging and metrics reporting, or to determine database or table names.

The available container metadata includes the requested and maximum amounts

of resources such as CPU, memory, and ephemeral storage. The containerized

application might wish to use this information in order to throttle its own

resource usage. See the Kubernetes documentation for an example of injecting

pod information as a volume.

Hostpath volumes

A hostPath volume mounts a file or directory into a pod from the Kubernetes worker

node where it is running. This is analogous to the bind mount concept in Docker dis‐

cussed above. Using a hostPath volume has one advantage over an emptyDir volume:

the data will survive the restart of a pod.

However, there are some disadvantages to using hostPath volumes. First, in order for

a replacement pod to access the data of the original pod, it will need to be restarted

on the same worker node. While Kubernetes does give you the ability to control

which node a pod is placed on using affinity, this tends to constrain the Kubernetes

scheduler from optimal placement of pods, and if the node goes down for some rea‐

son, the data in the hostPath volume is lost. Second, similar to Docker bind mounts,

there is a security concern with hostPath volumes in terms of allowing access to the

36 | Chapter 2: Managing Data Storage on Kubernetes

local filesystem. For these reasons, hostPath volumes are only recommended for

development deployments.

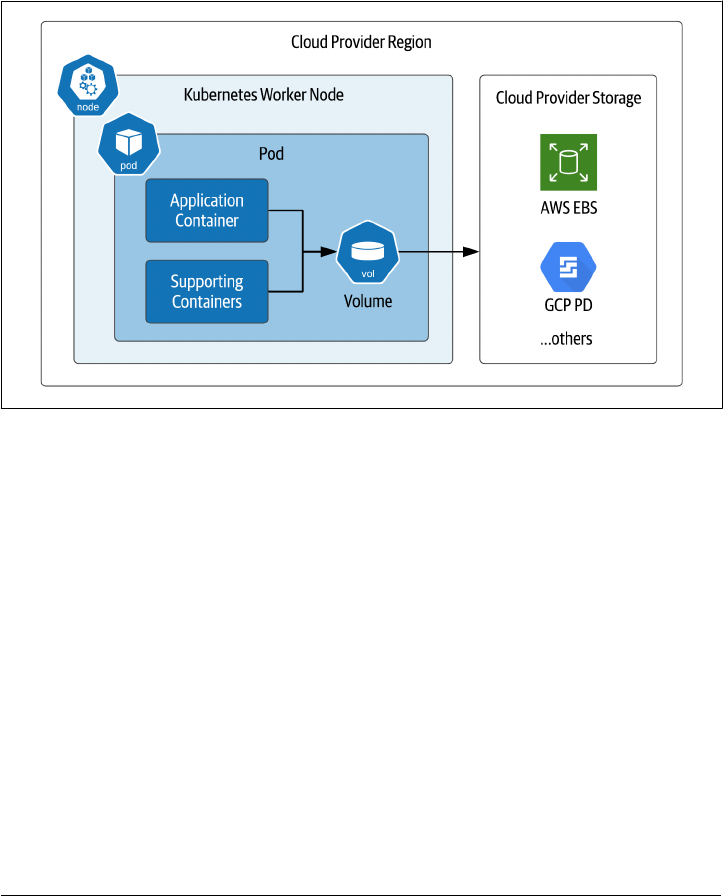

Cloud Volumes

It is possible to create Kubernetes volumes that reference storage locations beyond just the

worker node where a pod is running, as shown in Figure 2-7. These can be grouped into volume

types that are provided by named cloud providers, and those that attempt to provide a more

generic interface. .

Figure 2-7. Kubernetes pods directly mounting cloud provider storage

.

These include the following:

• The awsElasticBlockStore volume type is used to mount volumes on Amazon

Web Services (AWS) Elastic Block Store (EBS). Many databases use block storage

as their underlying storage layer.

• The gcePersistentDisk volume type is used to mount Google Compute Engine

(GCE) persistent disks (PD), another example of block storage.

• Two types of volumes are supported for Microsoft Azure: azureDisk for Azure

Disk Volumes, and azureFile for Azure File Volumes

• For OpenStack deployments, the cinder volume type can be used to access Open‐

Stack Cinder volumes

Usage of these types typically requires configuration on the cloud provider, and

access from Kubernetes clusters is typically confined to storage in the same cloud

Kubernetes Resources for Data Storage | 37

region and account. Check your cloud provider’s documentation for additional

details.

Additional Volume Providers

There are a number of additional volume providers that vary in the types of storage

provided. Here are a few examples:

• The fibreChannel volume type can be used for SAN solutions implementing the

FibreChannel protocol.

•

The gluster volume type is used to access file storage using the Gluster dis‐

tributed file system referenced above

• An iscsi volume mounts an existing iSCSI (SCSI over IP) volume into your Pod.

• An nfs volume allows an existing NFS (Network File System) share to be moun‐

ted into a Pod

We’ll examine more volume providers below that implement the Container Attached

Storage pattern.

Table 2-1 provides a comparison of Docker and Kubernetes storage concepts we’ve

covered so far.

Table 2-1. Table 2-1: Comparing Docker and Kubernetes storage options

Type of Storage Docker Kubernetes

Access to persistent storage from various providers Volume (accessed via

Volume drivers)

Volume (accessed via in-tree or CSI

drivers)

Access to host

lesystem (not recommended for

production)

Bind mount Hostpath volume

Temporary storage available while container (or pod) is

running

tmpfs emptyDir and other ephemeral

volumes

Conguration

and environment data (read-only)

(no direct equivalent) CongMap,

Secret, Downward API

Sidebar: How do you choose a Kubernetes storage solution?

Given the number of storage options available, it can certainly be an intimidating task

to try to determine what kind of storage you should use for your application. Along

with determining whether you need file, block, or object storage, you’ll want to con‐

sider your latency and throughput requirements, as well as your expected storage vol‐

ume. For example, If your read latency requirements are aggressive, you’ll most likely

need a storage solution that keeps data in the same data center where it is accessed.

Next, you’ll want to consider any existing commitments or resources you have. Per‐

haps your organization has a mandate or bias toward using services from a preferred

cloud provider. The cloud providers will frequently provide cost incentives for using

38 | Chapter 2: Managing Data Storage on Kubernetes

their services, but you’ll want to trade this against the risk of lock-in to a specific ser‐

vice. Alternatively, you might have an investment in a storage solution in an on-

premises data center that you need to leverage.

Overall, cost tends to be the overriding factor in choosing storage solutions, espe‐

cially over the long term. Make sure your modeling includes not only the cost of the

physical storage and any managed services, but also the operational cost involved in

managing your chosen solution.

In this section, we’ve discussed how to use volumes to provide storage that can be

shared by multiple containers within the same pod. While this is sufficient for some

use cases, there are some needs this doesn’t address. A volume does not provide the

ability to share storage resources between pods. The definition of a particular storage

location is tied to the definition of the pod. Managing storage for individual pods

doesn’t scale well as the number of pods deployed in your Kubernetes cluster increa‐

ses.

Thankfully, Kubernetes provides additional primitives that help simplify the process

of provisioning and mounting storage volumes for both individual pods and groups

of related pods. We’ll investigate these concepts in the next several sections.

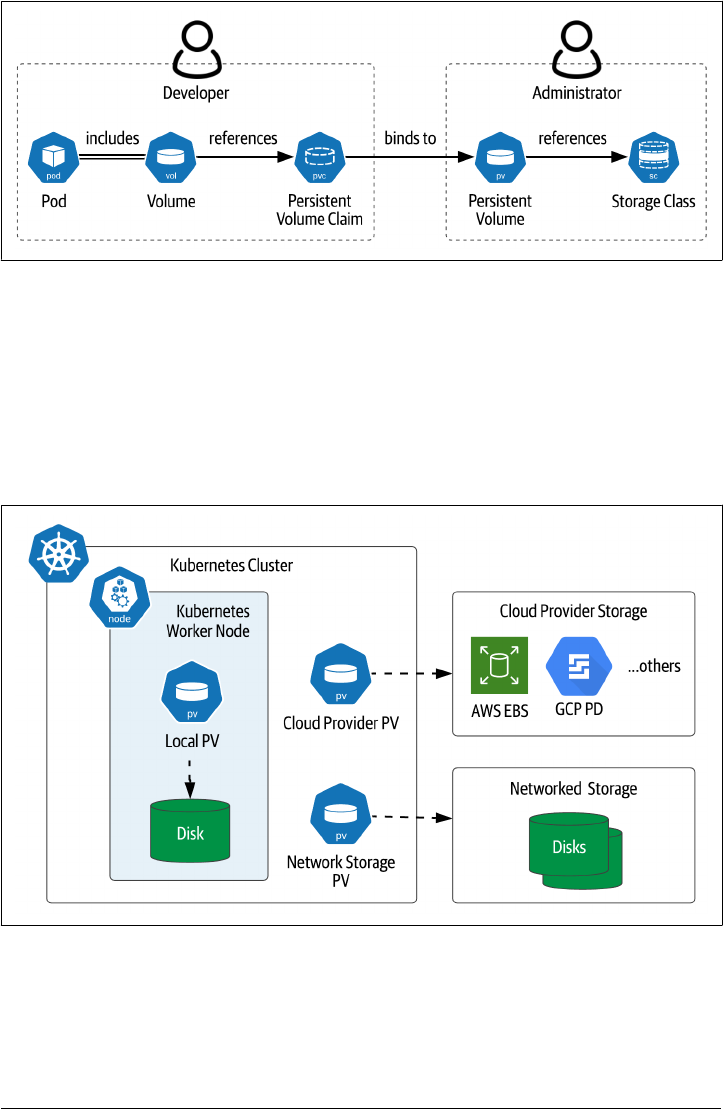

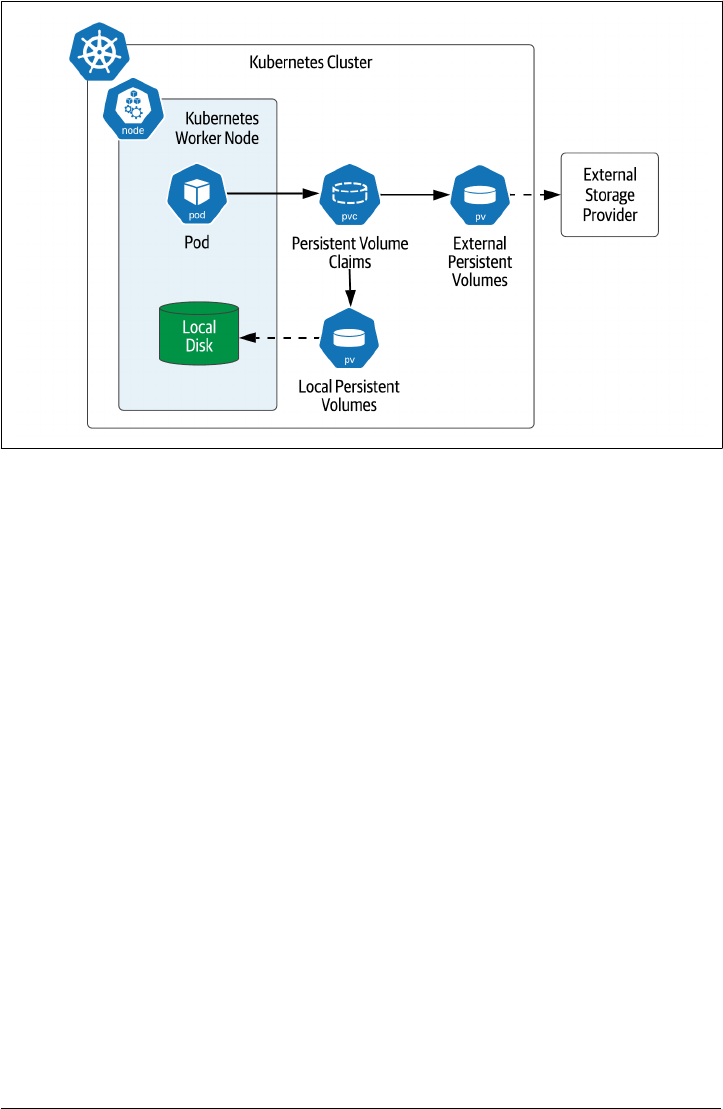

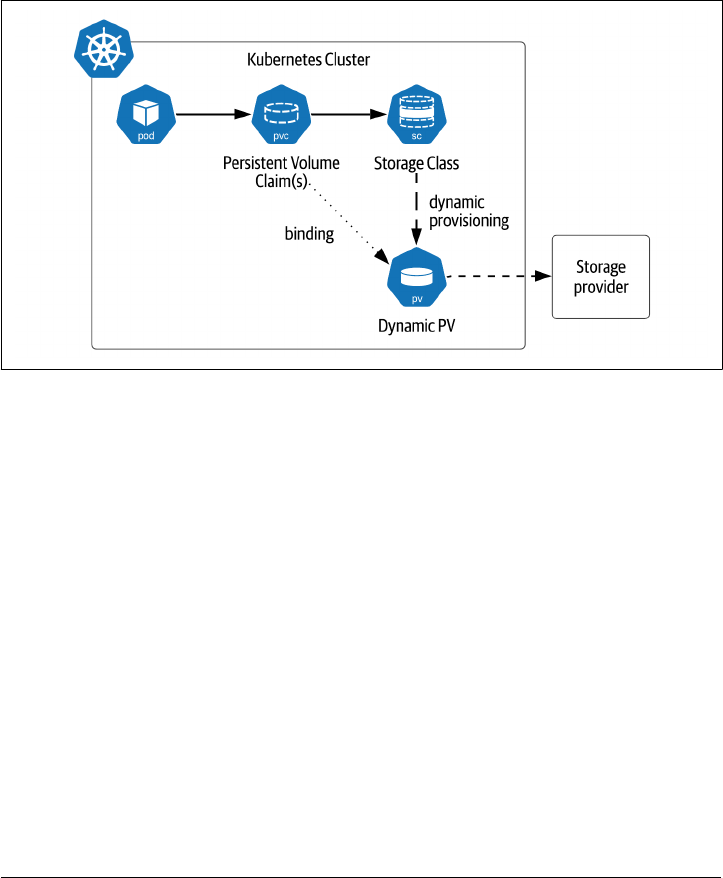

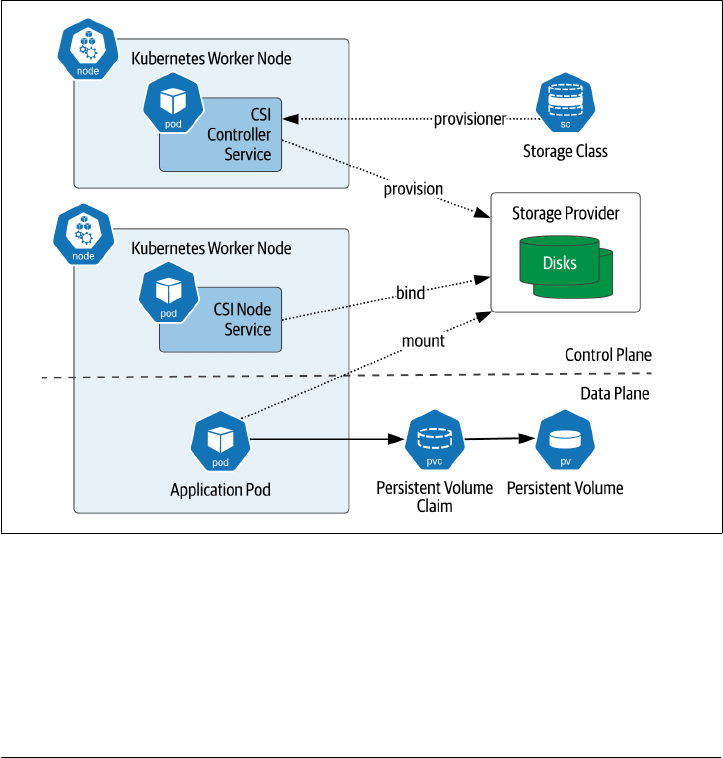

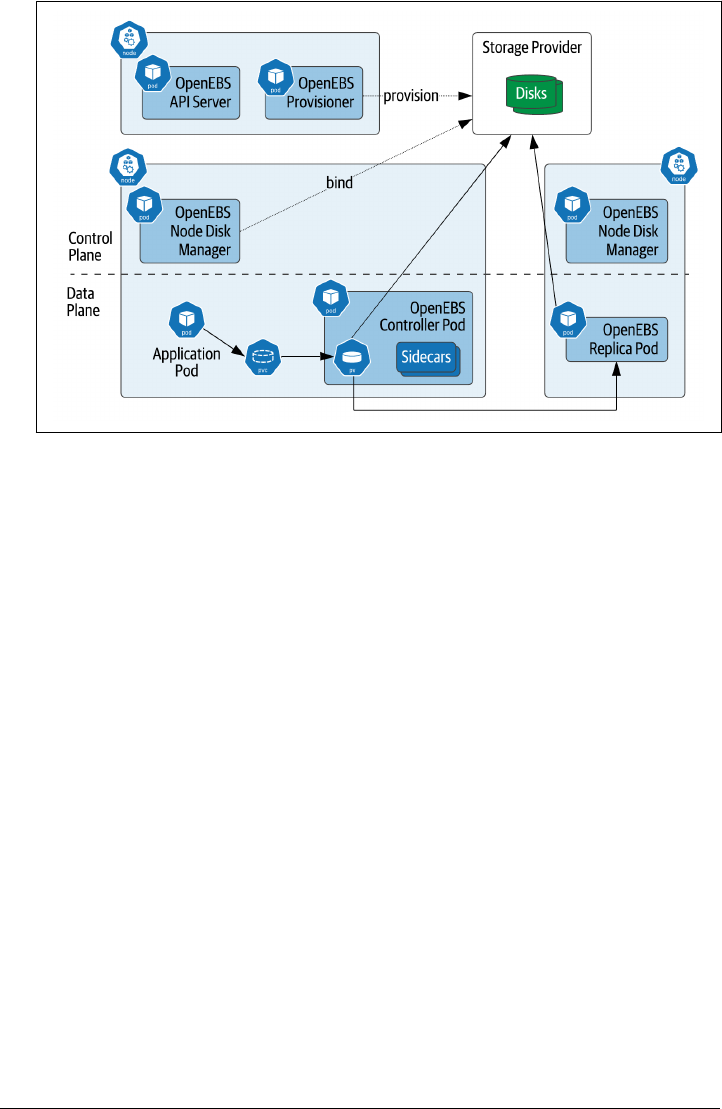

PersistentVolumes

The key innovation the Kubernetes developers have introduced for managing storage

is the persistent volume subsystem. This subsystem consists of three additional

Kubernetes resources that work together: PersistentVolumes, PersistentVolume‐

Claims, and StorageClasses. This allows you to separate the definition and lifecycle of

storage from how it is used by pods, as shown in Figure 2-8:

•

Cluster administrators define PersistentVolumes, either explicitly or by creating a

StorageClass that can dynamically provision new PersistentVolumes.

• Application developers create PersistentVolumeClaims that describe the storage

resource needs of their applications, and these PersistentVolumeClaims can be

referenced as part of volume definitions in pods.

•

The Kubernetes control plane manages the binding of PersistentVolumeClaims

to PersistentVolumes.

Kubernetes Resources for Data Storage | 39

Figure 2-8. PersistentVolumes, PersistentVolumeClaims, and StorageClasses

Let’s look first at the PersistentVolume resource (often abbreviated PV), which